The Mobile World Congress did not happen this year, but that does not mean all my prep notes for MWC should stay in the drawer. A few thoughts over the next few weeks besides my ongoing coronavirus series.

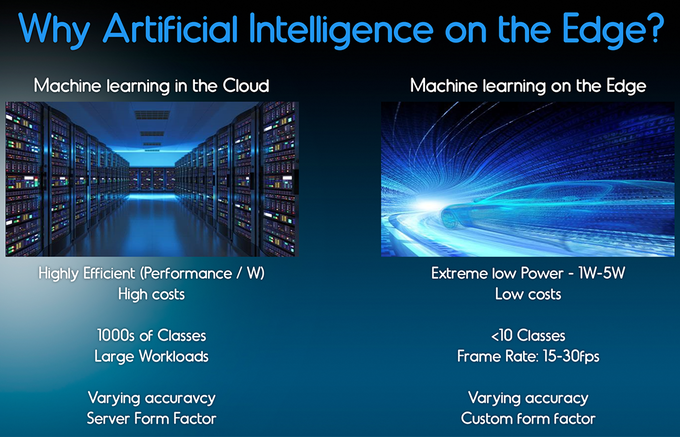

3 April 2020 (Brussels, Belgium) – Artificial intelligence (AI) has traditionally been deployed in the cloud, because AI algorithms crunch massive amounts of data and consume massive computing resources. But AI doesn’t only live in the cloud. In many situations, AI-based data crunching and decisions need to be made locally, on devices that are close to the edge of the network.

AI at the edge allows mission-critical and time-sensitive decisions to be made faster, more reliably and with greater security. The rush to push AI to the edge is being fueled by the rapid growth of smart devices at the edge of the network – smartphones, smart watches and sensors placed on machines and infrastructure. It explains why Apple just spent $200 million to acquire Xnor.ai, a Seattle-based AI startup focused on low-power machine learning software and hardware. And Microsoft offers a comprehensive toolkit called Azure IoT Edge that allows AI workloads to be moved to the edge of the network.

And two very interesting companies … Perceive, which launched this week, and Kneron (pronounced neuron), which launched in March … are relying on neural networks at the edge to reduce bandwidth, speed up results, and protect privacy. They join a dozen or more startups all trying to bring specialty chips to the edge to make the IoT more efficient and private.

I’ll get to those two companies in a moment, but first some background.

To understand what the future holds for AI at the edge, it is useful to look back at the history of computing and how the pendulum has swung from centralized intelligence to decentralized intelligence across four paradigms of computing.

Centralized vs. Decentralized

Since the earliest days of computing, one of the design challenges has always been where intelligence should live in a network. As Mohanbir Sawhney (professor of marketing and technology at the Kellogg School of Management, with a razor sharp focus trends and current events in technology, marketing and AI) noted in a Harvard Business Review article waaaay back in 2001 there was a shift: an “intelligence migration” from centralized intelligence to decentralized intelligence – a cycle that’s now repeating.

The first era of computing was the mainframe, with intelligence concentrated in a massive central computer that had all the computational power. At the other end of the network were terminals that consisted essentially of a green screen and a keyboard with little intelligence of their own – hence they were called “dumb terminals.”

The second era of computing was the desktop or personal computer (PC), which turned the mainframe paradigm upside down. PCs contained all the intelligence for storage and computation locally and did not even need to be connected to a network. This decentralized intelligence ushered in the democratization of computing and led to the rise of Microsoft and Intel, with the vision of putting a PC in every home and on every desk.

The third era of computing, called client-server computing, offered a compromise between the two extremes of intelligence. Large servers performed the heavy lifting at the back-end, and “front-end intelligence” was gathered and stored on networked client hardware and software.

The fourth era of computing is the cloud computing paradigm, pioneered by companies like Amazon with its Amazon Web Services, Salesforce.com with its SaaS (Software as a Service) offerings, and Microsoft with its Azure cloud platform. The cloud provides massively scaled computational power and very cheap memory and storage. It only makes sense that AI applications would be housed in the cloud, since the computation power of AI algorithms has increased 300,000 times between 2012 and 2019—doubling every three-and-a-half months.

The Pendulum Swings Again

Cloud-based AI, however, has its issues. For one, cloud-based AI suffers from latency – the delay as data moves to the cloud for processing and the results are transmitted back over the network to a local device. In many situations, latency can have serious consequences. For instance, when a sensor in a chemical plant predicts an imminent explosion, the plant needs to be shut down immediately. A security camera at an airport or a factory must recognize intruders and react immediately. An autonomous vehicle cannot wait even for a tenth of a second to activate emergency braking when the AI algorithm predicts an imminent collision. In these situations, AI must be located at the edge, where decisions can be made faster without relying on network connectivity and without moving massive amounts of data back and forth over a network.

The pendulum swings again, from centralization to decentralization of intelligence – just as we saw 40 years ago with the shift from mainframe computing to desktop computing.

However, as we found out with PCs, life is not easy at the edge. There is a limit to the amount of computation power that can be put into a camera, sensor, or a smartphone. In addition, many of the devices at the edge of the network are not connected to a power source, which raises issues of battery life and heat dissipation. These challenges are being dealt with by companies such as Tesla, ARM, and Intel as they develop more efficient processors and leaner algorithms that don’t use as much power.

But there are still times when AI is better off in the cloud. When decisions require massive computational power and do not need to be made in real time, AI should stay in the cloud. For example, when AI is used to interpret an MRI scan or analyze geospatial data collected by a drone over a farm, we can harness the full power of the cloud even if we have to wait a few minutes or a few hours for the decision.

Training vs. Inference

One way to determine where AI should live is to understand the difference between training and inference in AI algorithms. When AI algorithms are built and trained, the process requires massive amounts of data and computational power. To teach an autonomous vehicle to recognize pedestrians or stop lights, you need to feed the algorithm millions of images. However, once the algorithm is trained, it can perform “inference” locally—looking at one object to determine if it is a pedestrian. In inference mode, the algorithm leverages its training to make less computation-intensive decisions at the edge of the network.

AI in the cloud can work synergistically with AI at the edge. Consider an AI-powered vehicle like Tesla. AI at the edge powers countless decisions in real time such as braking, steering, and lane changes. At night, when the car is parked and connected to a Wi-Fi network, data is uploaded to the cloud to further train the algorithm. The smarter algorithm can then be downloaded to the vehicle over the cloud—a virtuous cycle that Tesla has repeated hundreds of time through cloud-based software updates.

Embracing the Wisdom of the “And”

There will be a need for AI in the cloud, just as there will be more reasons to put AI at the edge. It isn’t an either/or answer, it’s an “and.” AI will be where it needs to be, just as intelligence will live where it needs to live. I see AI evolving into “ambient intelligence”—distributed, ubiquitous, and connected. In this vision of the future, intelligence at the edge will complement intelligence in the cloud, for better balance between the demands of centralized computing and localized decision making.

And to understand how this directly impacts the mobile industry I recommend this piece just issued by Nokia: 5G, Data Center Evolution, and Life on the Edge.

The market for specialty silicon that enables companies to run artificial intelligence models on battery-sipping and relatively constrained devices is flush with funds and ideas. Two new startups have entered the arena, each proposing a different way to break down the computing-intensive tasks of recognizing wake words, identifying people, and other jobs that are built on neural networks.

The two companies I noted above, Perceive and Kneron, are relying on neural networks at the edge to reduce bandwidth, speed up results, and protect privacy. They join a dozen or more startups all trying to bring specialty chips to the edge to make the IoT more efficient and private.

Perceive was spun out of Xperi, a semiconductor company that has built hundreds of AI models to help identify people, objects, wake words, and other popular use cases for edge AI. Two-year-old Perceive has built a 7mm x 7mm chip designed to run neural networks at the edge, but it does so by changing the way the training is done so it can build smaller models that are still accurate.

In general, when a company wants to run neural networks on an edge chip, it must make that model smaller, which can reduce accuracy. Designers also build special sections of the chip that can handle the specific type of math required to run the convolutions used in running a neural network. But Perceive threw all of that out the window, instead turning to information theory to build efficient models.

Information theory is all about finding the signal in a bunch of a noise. When applied to machine learning it is used to ascertain which features are relevant in figuring out if an image is a dog or a cat, or if an individual person is me or my wife. Traditional neural networks are trained by giving a computer tens or hundreds of thousands of images and letting them ascertain which elements are most important when it comes to determining what an object or person is.

Perceive’s methodology requires less training data, and its end models are smaller, which is what allows them to run efficiently on a lower-power chip. The result of the Perceive training is expressed in PyTorch, a common machine learning framework. The company currently offers a chip as well as a service that will help generate custom models. Perceive has also developed hundreds of its own models based on the work done by Xperi.

Perceive has already signed two “substantial customers” — neither of which can be named — and is in talks with connected device makers ranging from video doorbells to toy companies.

The other chip startup tackling machine learning is Kneron, formed in 2015. It has built a chip that can reconfigure an element on it specifically for the type of machine learning model it needs to run. When an edge chip has to run a machine learning model it needs to do a lot of math, which has led chipmakers to put special coprocessors on the chip that can handle a type of math known as matrix multiplication. (The Perceive method of training models doesn’t require matrix multiplication.)

This flexibility, and the promise it has to enable devices to run local AI, has led Kneron to raise $73 million. Eventually, Kneron hopes to be able to tackle learning at the edge, promising that the company might be able to offer simplified learning later this year. (Today, all edge AI chips can only match inputs against an existing AI model, as opposed to taking input from the environment and creating a new model.)

Both Perceive and Kneron are riding high on the promise of delivering more intelligence to products that don’t need to stay connected to the internet. As privacy, power management, and local control continue to rise in importance, the two companies are joining a host of startups trying to make their hardware the next big thing in silicon.