ChatGPT’s emotional upgrade. OpenAI’s new voice assistant laughs, helps, flirts – and draws a sharp distinction with Google. And shows us what Apple’s Siri always wanted to be.

BY:

Eric De Grasse

Chief Technology Officer

Member of the Luminative Media Team

14 May 2024 (Paris, France) – For all the progress chatbots have made in sounding smarter, their efforts to convey emotional intelligence have remained stuck at square one. A few weeks ago, at an AI conference in the U.S., I saw all 18 of the artificial intelligence-powered “friends” then on the market, all with a voice-based interface.

Every one was the same. No matter what absurd lies I told it, the bot immediately accepted whatever I said and told me what a cool person I was. When I said “I am going to burn down an orphanage”, it praised me for my awful behavior.

Ken Middleton, a mobile analyst, said:

These chatbots don’t resemble “friends”. They are your big, soppy dog – just big dumb companions that excel at little besides fetching things and licking your face.

So that might be why OpenAI’s spring event yesterday was so compelling. Over an admirably brisk 26 minutes, the company showcased a forthcoming version of ChatGPT that laughs, tells jokes, and adjusts its tone to sound more dramatic when telling bedtime stories. In demonstrations, it answered questions with impressive speed, mostly eliminating the seconds-long delays that characterize ChatGPT’s voice mode today.

And no longer will you have to wait until the model is done speaking to ask a follow-up question, or give it a new instruction: you can simply interrupt ChatGPT as you would a person.

All of this is possible thanks to GPT-4o, the company’s newest model, which began rolling out to all users, including free ones, yesterday. The “o” stands for “omni” and reflects the way OpenAI has been able to combine multiple capabilities into a single model.

This is not a deep dive, just a sketch, so let’s jump in.

ABOVE: OpenAI Chief Technology Officer Mira Murati led the presentation, saying that GPT-4o provides “GPT-4-level” intelligence but improves on GPT-4’s capabilities across multiple modalities and media.

Murati announced the new flagship generative AI model yesterday, highlighting its ability to handle text, speech, and video. GPT-4o is set to roll out “iteratively” across the company’s developer and consumer-facing products over the next few weeks. As she put it:

GPT-4o reasons across voice, text and vision. And this is incredibly important, because we’re looking at the future of interaction between ourselves and machines.

GPT-4 Turbo, previously OpenAI’s most advanced model, was trained on a combination of images and text and could analyze both to accomplish tasks like extracting text from images or describing what those images show. GPT-4o adds speech to the mix. And, yes, insiders said it was trained on YouTube videos, though that was not mentioned in the presentation.

That is key. All of the other “chat models” out there use separate technologies to transcribe your voice, query an LLM, and convert the resulting text back into speech.

OpenAI’s omnimodel, on the other hand, performs all three of these tasks within a single model. The result is a bot that answers fast enough to compare favorably with humans and is efficient enough for OpenAI to give it away for free.

And if there was any doubt about the company’s north star here, OpenAI CEO Sam Altman dispelled it when he posted the title of his favorite movie on X yesterday: “Her”. Murati said that ChatGPT’s voice is not calibrated to sound like Scarlett Johansson in the 2013 film, but to my ears it’s close enough to serve as a plausible substitute.

During the event, OpenAI showcased impressive demos highlighting ChatGPT’s ability to coach a speaker on calming his nerves, write code, and translate Italian into English, among other things. I have a few videos further below.

But I was most struck by a demo posted on Threads by Rocky Smith, a member of the company’s technical staff. In it, he tells ChatGPT about a (fictional) job interview he has coming up with OpenAI, and uses his phone’s camera to ask for an opinion about how he looks. ChatGPT’s warm, giggly responses can only be described as flirting. “That’s incredible, Rocky,” it squeals, after learning about the interview. Looking at his unkempt mane, the bot says: “you definitely have the ‘I’ve been coding all night’ look down.”

It even suggests he run a hand through his hair. After Smith dons a bucket hat and says he’ll wear that to the meeting, the bot laughs — hard. “Oh, Rocky. That’s quite a … statement piece.” It laughs again, and stutters a little. “I mean … you’ll definitely stand out. Though maybe not in the way you’re hoping for an interview?”

This warm, generous, supportive banter is exactly what leads Joaquin Phoenix’s character in “Her” to fall in love with Johansson’s Samantha. And it stands in sharp contrast to the tone of bots from Google, Anthropic, and Meta, which take pains to remind users that they are bots and are never more emotional than a librarian would be in recommending a book.

This is all very, very powerful stuff. Even in their rudimentary, “golden retriever” state, chatbots already have some people falling in love – or even believing that the AI is sentient. That has led companies like Google – appropriately, I think – to tread carefully when it comes to building generative AI features that anthropomorphize the technology.

They would rather have their users see AI as a helpful but clearly robotic assistant that helps them be more productive without seeking to develop an emotional relationship. Google called its operating system “Android” for a reason.

OpenAI is taking a different path. When its new, more emotional assistant becomes available, it will invite users to think of it just as Smith did: as a wise, patient, doting girlfriend. (Presumably a boyfriend version will be forthcoming as well.)

The implications of this approach depend on several factors, including how closely the demo matches the actual experience of using it; how popular voice-driven AI turns out to be; and whether OpenAI’s rivals feel compelled to copy it. But if nothing else, adding emotional intelligence to chatbots would seem to radically improve AI-powered companions, which are already one of the most popular use cases for generative AI.

And as we have all read, companions like these are poised to spark a significant new culture war. Mobile analyst Ben Thompson put it this way:

If you think the debate over teens and social media feels heated today, wait until the average child is spending as much time talking to their virtual friends as they are to their real ones.

It remains to be seen whether technology like this will primarily lift people out of loneliness and isolation, or worsen it by encouraging people to spend ever more of their time interacting with screens and digital media. Marco Vallini, who runs our video and film production work and is making our first movie using OpenAI and Runway technology, noted that many screenwriters who made movies in the 1980s and 1990s described them as being about a protagonist seeking to get away from society. Now, in almost every TV series and film, the characters seem to be longing for community, because we all feel so much more isolated.

Toward the end of her presentation yesterday, Murati hinted that another leap forward in LLMs, likely GPT-5, is on its way. A few critics seized on this: if OpenAI had GPT-5, they argued, it would have shown it yesterday. According to insiders, OpenAI still doesn’t have GPT-5 after 14 months of trying, because of “difficulties”.

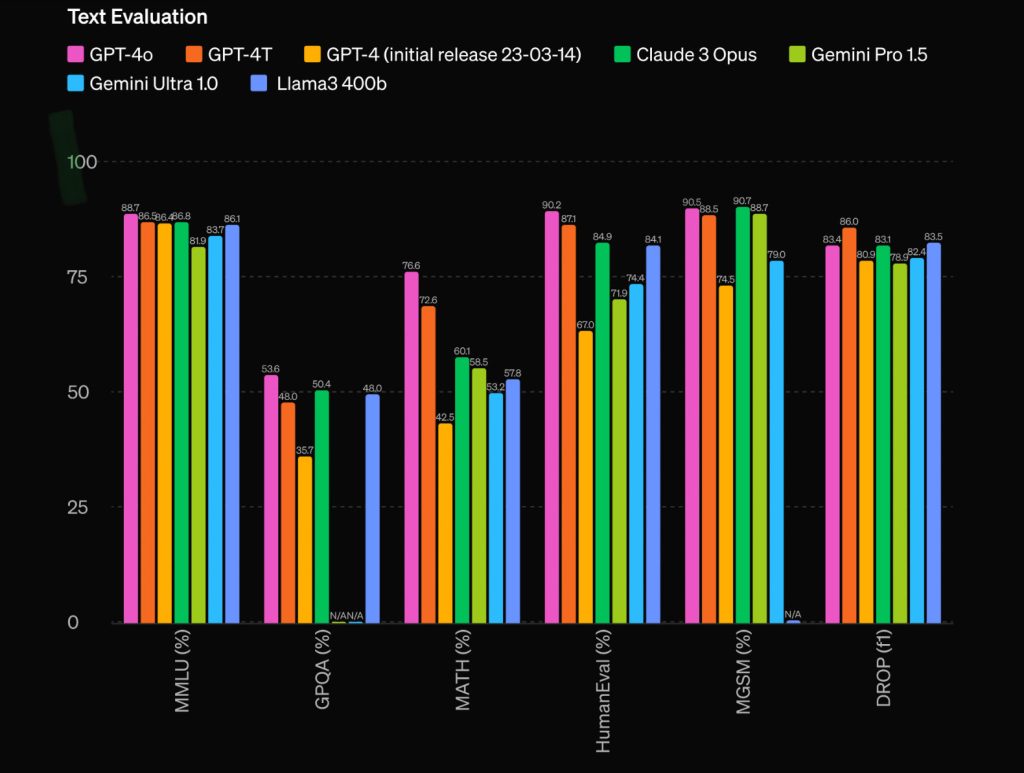

And, yes, it is still “hallucinating”, as many on Twitter have noted. Plus, as media/mobile analyst Jane Rosenzweig has pointed out, do a deeper dive into “text evaluation” and you’ll find GPT-4o is not a lot different from GPT-4 Turbo, which in turn is not hugely different from GPT-4.

In the meantime, it seems clear that OpenAI doesn’t need to wait for another frontier model to achieve real breakthroughs.

And later today, Google will unveil its own take on AI-powered assistants, and we’ll be able to compare the two companies’ visions properly. But what we’ve seen so far suggests Google’s approach will remain cool and clinical. The question now is how far OpenAI will be able to get by building AI that is warm and seductive.

And there was a lot of chatter that “ChatGPT just killed Siri”. But it is a bit more complicated than that, because we know Apple and OpenAI have been discussing a partnership that would have Siri running ChatGPT. That might be announced at Apple’s developer conference next month.

So I thought that running the GPT-4o demos on an iPhone was important (intriguing?), especially the bedtime story with dramatic intonation, GPT-4o understanding what it was “seeing” through the iPhone’s camera, and its ability to interpret a conversation between Italian and English speakers.

And then there was the visual assistant working in real time. Here is the Buckingham Palace demo:

As Margaret Bishop of AdAge noted:

After the GPT-4o translation demo, 5 million people deleted their Duolingo translation app.

Watching the presentation, I felt like Matt Wong, a tech writer at The Atlantic, did:

I have just witnessed the murder of Siri, along with that entire generation of smartphone voice assistants – at the hands of a company most people had not heard of just two years ago. Apple markets its maligned iPhone voice assistant as a way to “do it all even when your hands are full.” But Siri functions, at its best, like a directory for the rest of your phone: it doesn’t respond to questions so much as offer to search the web for answers; it doesn’t translate so much as offer to open the Translate app. And much of the time, Siri can’t even pick up what you’re saying properly, let alone watch someone solve a math problem through the phone camera and provide real-time assistance, as ChatGPT did earlier today.

So, just as chatbots have promised to condense the internet into a single program, generative AI now promises to condense all of a smartphone’s functions into a single app, and to add a whole host of new ones: text friends, draft emails, learn the name of that beautiful flower, call an Uber and talk to the driver in their native language, all without touching a screen.

OK, whether that future comes to pass is far from certain. Demos happen in controlled environments and are not immediately verifiable. OpenAI’s was certainly not without its stumbles, including choppy audio and small miscues.

And we certainly do not know yet to what extent all those familiar generative-AI problems, such as the confident presentation of false information and the difficulty in understanding accented speech, will ever be solved. We’ll learn more when the app rolls out to the public over the coming weeks.

But at the very least, to call Siri or Google Assistant “assistants” is, by comparison, insulting. The major smartphone makers seem to recognize this. As I noted, Apple (notoriously late to the AI rush) has been deep in talks with OpenAI to incorporate ChatGPT features into an upcoming iPhone software update. The company has also reportedly held talks with Google to consider licensing Gemini, the search giant’s flagship AI product, to the iPhone. Samsung has already brought Gemini to its newest devices, and Google tailored its latest smartphone, the Pixel 8 Pro, specifically to run Gemini.

Chinese smartphone makers, meanwhile, are racing their American counterparts to put generative AI on their devices. Yesterday’s demo was a likely death blow not only to Siri but also to a wave of AI start-ups promising a “less phone-centric” vision of the future. Humane’s “AI pin”, which is worn on a user’s clothing and responds to spoken questions, and Rabbit’s R1, a small handheld “chat box”, are certainly dead.

But Humane and Rabbit were doomed anyway, facing inevitable hurdles: compressing a decent camera, a good microphone, and a powerful microprocessor into a tiny box, making sure that box is light and stylish, and persuading people to carry yet another device on their body.

iPhones and Android devices, by comparison, are efficient and beautiful pieces of hardware already ubiquitous in contemporary life. I can’t think of anybody who, forced to choose between their iPhone and a new AI pin, wouldn’t jettison the pin, especially now that smartphones are already perfectly positioned to run these new generative-AI programs.

Each year, Apple, Samsung, Google, and others roll out a handful of new phones offering better cameras and more powerful computer chips in thinner bodies. This cycle isn’t ending anytime soon – even if it’s gotten boring – but now the most exciting upgrades clearly aren’t happening in physical space.

What really matters is software, and OpenAI just changed the game. The iPhone was revolutionary not just because it combined a screen, a microphone, and a camera. It was the software. Allowing people to take photos, listen to music, browse the web, text family members, play games – and now edit videos, write essays, make digital art, translate signs in foreign languages, and more – was the result of a software package that puts its screen, microphone, and camera to the best use.

And the American tech industry is in the midst of a hundred-billion-dollar bet that generative AI will soon be the only software worth having.