Yesterday, the Facebook whistleblower, Frances Haugen, testified before a U.S. Senate committee. I recorded it and watched the whole thing. She repeated much of what we heard in her “60 Minutes” interview over the weekend: Facebook has been lying to the public about making significant progress against hate, violence and misinformation.

But Haugen’s testimony had tunnel vision. She got a lot right and a lot wrong, so I want to step back a bit and establish some fundamentals.

6 October 2021 (Athens, Greece) – For more than three hours yesterday, Facebook whistleblower Frances Haugen testified before a subcommittee of the U.S. Senate Commerce Committee. She was calm. She was confident. She was in control. She said the company should declare “moral bankruptcy,” noting:

“This is not simply a matter of certain social media users being angry or unstable, or about one side being radicalized against the other. It is Facebook choosing to grow at all costs, becoming an almost trillion dollar company by buying its profits with our safety.”

The Senate largely ate it up. Long frustrated by Facebook’s size and power (and, one suspects, by its own inability to address those issues in any constructive way), senators yielded the floor to Haugen to make her case. Ultimately, Haugen said little on Tuesday that wasn’t already known, either because she had said it on “60 Minutes” or because it had been covered in the Wall Street Journal series. What she might have done, though, is finally galvanize support in Congress for meaningful tech regulation.

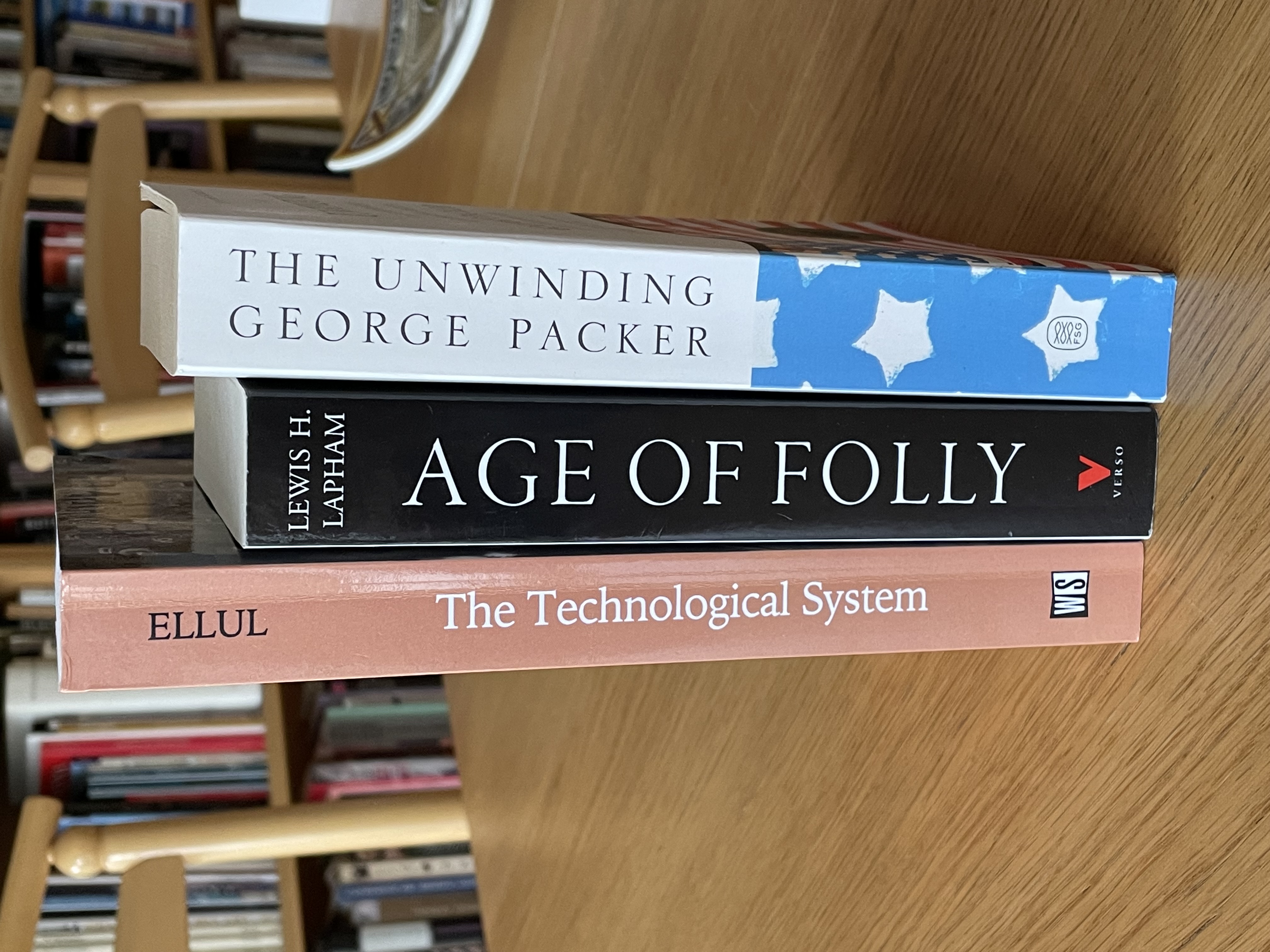

But for all its strengths, Haugen’s testimony had its weaknesses as well. For me, it had tunnel vision. Those who opine about social networks seem stuck in a forever-conversation about trying to solve society-level problems at the level of “the social media/news feed.” But you need to bring a lot of other subjects into the conversation: how the U.S. was growing polarized long before the arrival of social networks, for instance, or the research showing that long-term Fox News viewership tends to shift people’s political opinions more than Facebook usage does. You need to see the long-term historical development of how we got here by reading a few seminal books:

Yes, it is possible to consider a subject from so many angles that you find yourself paralyzed. But it’s equally paralyzing to begin your effort to rein in Big Tech with the assumption that if you can only “fix” Facebook, you’ll fix society as well.

So I want to step back and suggest you read (I know, like you have the time) the following essay by Joseph Bernstein.

Joseph Bernstein is a Soviet-born Israeli mathematician working at Tel Aviv University. He works in algebraic geometry, representation theory, and number theory. I met him a few years ago at the DLD Tel Aviv Innovation Festival, an annual event for companies, start-ups, investors, entrepreneurs and more that offers a peek at what’s coming next: technology you will read about first in “MIT Technology Review” and on arXiv before it hits the mainstream tech media.

It can be difficult to explain why the disinformation frame has become so dominant. Like the French sociologist Jacques Ellul, who wrote a landmark study of propaganda in 1962, I dismiss the common view that propaganda and misinformation are the work of a few evil men, seducers of the people. Bernstein cites Ellul in this essay, and I’ve read all of Ellul’s works. According to Bernstein, though, that dominance might be explained by two factors:

Because one reason to grant Silicon Valley’s assumptions about our mechanistic persuadability is that it prevents us from thinking too hard about the role we play in taking up and believing the things we want to believe. It turns a huge question about the nature of democracy in the digital age – what if the people believe crazy things, and now everyone knows it? – into a technocratic negotiation between tech companies, media companies, think tanks, and universities.

And at the end of the day, it’s possible that the Establishment simply needs the theater of social-media persuasion to build a political world that still makes sense, to explain Brexit and Trump and the loss of faith in the decaying institutions of the West.

Later this week I will write my more detailed take on Haugen’s testimony and what I think is really happening with Facebook. For the moment, though, I recommend the following brilliant essay, which will take you through all those angles on disinformation I noted above.

Selling the story of disinformation

Joseph Bernstein

In the beginning, there were ABC, NBC, and CBS, and they were good. Midcentury American man could come home after eight hours of work and turn on his television and know where he stood in relation to his wife, and his children, and his neighbors, and his town, and his country, and his world. And that was good. Or he could open the local paper in the morning in the ritual fashion, taking his civic communion with his coffee, and know that identical scenes were unfolding in households across the country.

Over frequencies our American never tuned in to, red-baiting, ultra-right-wing radio preachers hyperventilated to millions. In magazines and books he didn’t read, elites fretted at great length about the dislocating effects of television. And for people who didn’t look like him, the media had hardly anything to say at all. But our man lived in an Eden, not because it was unspoiled, but because he hadn’t considered any other state of affairs. For him, information was in its right—that is to say, unquestioned—place. And that was good, too.

Today, we are lapsed. We understand the media through a metaphor—“the information ecosystem”—which suggests to the American subject that she occupies a hopelessly denatured habitat. Every time she logs on to Facebook or YouTube or Twitter, she encounters the toxic byproducts of modernity as fast as her fingers can scroll. Here is hate speech, foreign interference, and trolling; there are lies about the sizes of inauguration crowds, the origins of pandemics, and the outcomes of elections.

She looks out at her fellow citizens and sees them as contaminated, like tufted coastal animals after an oil spill, with “disinformation” and “misinformation.” She can’t quite define these terms, but she feels that they define the world, online and, increasingly, off.

Everyone scrounges this wasteland for tainted morsels of content, and it’s impossible to know exactly what anyone else has found, in what condition, and in what order. Nevertheless, our American is sure that what her fellow citizens are reading and watching is bad. According to a 2019 Pew survey, half of Americans think that “made-up news/info” is “a very big problem in the country today,” about on par with the “U.S. political system,” the “gap between rich and poor,” and “violent crime.” But she is most worried about disinformation, because it seems so new, and because so new, so isolable, and because so isolable, so fixable. It has something to do, she knows, with the algorithm.

What is to be done with all the bad content? In March, the Aspen Institute announced that it would convene an exquisitely nonpartisan Commission on Information Disorder, co-chaired by Katie Couric, which would “deliver recommendations for how the country can respond to this modern-day crisis of faith in key institutions.” The fifteen commissioners include Yasmin Green, the director of research and development for Jigsaw, a technology incubator within Google that “explores threats to open societies”; Garry Kasparov, the chess champion and Kremlin critic; Alex Stamos, formerly Facebook’s chief security officer and now the director of the Stanford Internet Observatory; Kathryn Murdoch, Rupert Murdoch’s estranged daughter-in-law; and Prince Harry, Prince Charles’s estranged son. Among the commission’s goals is to determine “how government, private industry, and civil society can work together . . . to engage disaffected populations who have lost faith in evidence-based reality,” faith being a well-known prerequisite for evidence-based reality.

The Commission on Information Disorder is the latest (and most creepily named) addition to a new field of knowledge production that emerged during the Trump years at the juncture of media, academia, and policy research: Big Disinfo. A kind of EPA for content, it seeks to expose the spread of various sorts of “toxicity” on social-media platforms, the downstream effects of this spread, and the platforms’ clumsy, dishonest, and half-hearted attempts to halt it. As an environmental cleanup project, it presumes a harm model of content consumption. Just as, say, smoking causes cancer, consuming bad information must cause changes in belief or behavior that are bad, by some standard. Otherwise, why care what people read and watch?

Big Disinfo has found energetic support from the highest echelons of the American political center, which has been warning of an existential content crisis more or less constantly since the 2016 election. To take only the most recent example: in May, Hillary Clinton told the former Tory leader Lord Hague that “there must be a reckoning by the tech companies for the role that they play in undermining the information ecosystem that is absolutely essential for the functioning of any democracy.”

Somewhat surprisingly, Big Tech agrees. Compared with other, more literally toxic corporate giants, those in the tech industry have been rather quick to concede the role they played in corrupting the allegedly pure stream of American reality. Only five years ago, Mark Zuckerberg said it was a “pretty crazy idea” that bad content on his website had persuaded enough voters to swing the 2016 election to Donald Trump. “Voters make decisions based on their lived experience,” he said. “There is a profound lack of empathy in asserting that the only reason someone could have voted the way they did is because they saw fake news.” A year later, suddenly chastened, he apologized for being glib and pledged to do his part to thwart those who “spread misinformation.”

Denial was always untenable, for Zuckerberg in particular. The so-called techlash, a season of belatedly brutal media coverage and political pressure in the aftermath of Brexit and Trump’s win, made it difficult. But Facebook’s basic business pitch made denial impossible. Zuckerberg’s company profits by convincing advertisers that it can standardize its audience for commercial persuasion. How could it simultaneously claim that people aren’t persuaded by its content? Ironically, it turned out that the big social-media platforms shared a foundational premise with their strongest critics in the disinformation field: that platforms have a unique power to influence users, in profound and measurable ways. Over the past five years, these critics helped shatter Silicon Valley’s myth of civic benevolence, while burnishing its image as the ultra-rational overseer of a consumerist future.

Behold, the platforms and their most prominent critics both proclaim: hundreds of millions of Americans in an endless grid, ready for manipulation, ready for activation. Want to change an output—say, an insurrection, or a culture of vaccine skepticism? Change your input. Want to solve the “crisis of faith in key institutions” and the “loss of faith in evidence-based reality”? Adopt a better content-moderation policy. The fix, you see, has something to do with the algorithm.

In the run-up to the 1952 presidential election, a group of Republican donors were concerned about Dwight Eisenhower’s wooden public image. They turned to a Madison Avenue ad firm, Ted Bates, to create commercials for the exciting new device that was suddenly in millions of households. In Eisenhower Answers America, the first series of political spots in television history, a strenuously grinning Ike gave pithy answers to questions about the IRS, the Korean War, and the national debt. The ads marked the beginning of mass marketing in American politics. They also introduced ad-industry logic into the American political imagination: the idea that the right combination of images and words, presented in the right format, can predictably persuade people to act, or not act.

This mechanistic view of humanity was not without its skeptics. “The psychological premise of human manipulability,” Hannah Arendt wrote, “has become one of the chief wares that are sold on the market of common and learned opinion.” To her point, Eisenhower, who carried 442 electoral votes in 1952, would have likely won even if he hadn’t spent a dime on TV.

What was needed to quell doubts about the efficacy of advertising among people who buy ads was empirical proof, or at least the appearance thereof. Modern political persuasion, the sociologist Jacques Ellul wrote in his landmark 1962 study of propaganda, is defined by its aspirations to scientific rigor, “the increasing attempt to control its use, measure its results, define its effects.” Customers seek persuasion that audiences have been persuaded.

Luckily for the aspiring Cold War propagandist, the American ad industry had polished up a pitch. It had spent the first half of the century trying to substantiate its worth through association with the burgeoning fields of scientific management and laboratory psychology. Cultivating behavioral scientists and appropriating their jargon, writes the economist Zoe Sherman, allowed ad sellers to offer “a veneer of scientific certainty” to the art of persuasion:

They asserted that audiences, like the workers in a Taylorized workplace, need not be persuaded through reason, but could be trained through repetition to adopt the new consumption habits desired by the sellers.

The profitable relationship between the ad industry and the soft sciences took on a dark cast in 1957, when the journalist Vance Packard published The Hidden Persuaders, his exposé of “motivation research”—then the bleeding edge of collaboration between Madison Avenue and research psychology. The alarming public image Packard’s bestseller created—ad men wielding some unholy concoction of Pavlov and Freud to manipulate the American public into buying toothpaste—is still with us today. And the idea of the manipulability of the public is, as Arendt noted, an indispensable part of the product. Advertising is targeted at consumers, but sold to businesses.

Packard’s reporting was based on what motivation researchers told him. Among their own motivations, hardly hidden, was a desire to appear clairvoyant. In a late chapter, Packard admits as much:

Some of the researchers were sometimes prone to oversell themselves—or in a sense to exploit the exploiters. John Dollard, [a] Yale psychologist doing consulting work for industry, chided some of his colleagues by saying that those who promise advertisers “a mild form of omnipotence are well received.”

Today, an even greater aura of omnipotence surrounds the digital ad maker than did his print and broadcast forebears. According to Tim Hwang, a lawyer who formerly led public policy at Google, this image is maintained by two “pillars of faith”: that digital ads are both more measurable and more effective than other forms of commercial persuasion. The asset that structures digital advertising is attention. But, Hwang argues in his 2020 book Subprime Attention Crisis, attention is harder to standardize, and thus worth much less as a commodity, than the people buying it seem to think. An “illusion of greater transparency” offered to ad buyers hides a “deeply opaque” marketplace, automated and packaged in unseen ways and dominated by two grimly secretive companies, Facebook and Google, with every interest in making attention seem as uniform as possible. This is perhaps the deepest criticism one can make of these Silicon Valley giants: not that their gleaming industrial information process creates nasty runoff, but that nothing all that valuable is coming out of the factory in the first place.

Look closer and it’s clear that much of the attention for sale on the internet is haphazard, unmeasurable, or simply fraudulent. Hwang points out that despite being exposed to an enormous amount of online advertising, the public is largely apathetic toward it. More than that, online ads tend to produce clicks among people who are already loyal customers. This is, as Hwang puts it, “an expensive way of attracting users who would have purchased anyway.” Mistaking correlation for causation has given ad buyers a wildly exaggerated sense of their ability to persuade.

So too has the all-important consumer data on which targeted advertising is based, and which research has exposed as frequently shoddy or overstated. In recently unsealed court documents, Facebook managers disparaged the quality of their own ad targeting for just this reason. An internal Facebook email suggests that COO Sheryl Sandberg knew for years that the company was overstating the reach of its ads.

Why, then, do buyers love digital advertising so much? In many cases, Hwang concludes, it’s simply because it looks good at a meeting, blown up on an analytics dashboard: “It makes for great theater.” In other words, the digital-advertising industry relies on our perception of its ability to persuade as much as on any measurement of its ability to actually do so. This is a matter of public relations, of storytelling. And here, the disinformation frame has been a great asset.

The myths of the digital-advertising industry have played a defining role in the way the critics of Big Tech tell the story of political persuasion. That’s because paid political content is the kind of digital mis- and disinformation with the highest profile—the nefarious influence that liberal observers across the West blamed for Brexit and Trump. Like any really compelling narrative, this one has good guys and bad guys. The heroes in the disinformation drama are people like Christopher Wylie, who blew the whistle on the black magic of Cambridge Analytica, then asked the world to buy his book. The villains are people like Brad Parscale, the flamboyant strategist who, at six feet eight inches, could not have hidden himself from the press even if he wanted to, which he absolutely did not.

The digital director of Trump’s successful 2016 campaign, Parscale was bumped up to campaign manager for the reelection bid. Sensing that this idiosyncratically bearded man was the secret architect of Trump’s supposed digital dominance, the press turned him into a Sith Lord of right-wing persuasion, a master of the misinformation force. A March 2020 New Yorker profile of Parscale touted him as “the man behind Trump’s Facebook juggernaut,” who had “used social media to sway the 2016 election” and was “poised to do it again.” Parscale played the role with kayfabian glee, tweeting in May:

For nearly three years we have been building a juggernaut campaign (Death Star). It is firing on all cylinders. Data, Digital, TV, Political, Surrogates, Coalitions, etc. In a few days we start pressing FIRE for the first time.

Barely two months later, ahead of criticism that Parscale didn’t know how to handle the offline elements of a campaign, Trump demoted him. Two months after that, police officers detained the great manipulator, shirtless and bloated, outside his South Florida mansion, where he had loaded a pistol during an argument with his wife. After an involuntary hospitalization, he resigned from the campaign, citing “overwhelming stress.” (He has since started a new digital political consultancy.)

The media narrative of sinister digital mind control has obscured a body of research that is skeptical about the effects of political advertising and disinformation. A 2019 examination of thousands of Facebook users by political scientists at Princeton and NYU found that “sharing articles from fake news domains was a rare activity”—more than 90 percent of users had never shared any. A 2017 Stanford and NYU study concluded that

if one fake news article were about as persuasive as one TV campaign ad, the fake news in our database would have changed vote shares by an amount on the order of hundredths of a percentage point. This is much smaller than Trump’s margin of victory in the pivotal states on which the outcome depended.

Not that these studies should be taken as definitive proof of anything. Despite its prominence in the media, the study of disinformation is still in the process of answering definitional questions and hasn’t begun to reckon with some basic epistemological issues.

The most comprehensive survey of the field to date, a 2018 scientific literature review titled “Social Media, Political Polarization, and Political Disinformation,” reveals some gobsmacking deficits. The authors fault disinformation research for failing to explain why opinions change; lacking solid data on the prevalence and reach of disinformation; and declining to establish common definitions for the most important terms in the field, including disinformation, misinformation, online propaganda, hyperpartisan news, fake news, clickbait, rumors, and conspiracy theories. The sense prevails that no two people who research disinformation are talking about quite the same thing.

This will ring true to anyone who follows the current media discussion around online propaganda. “Misinformation” and “disinformation” are used casually and interchangeably to refer to an enormous range of content, ranging from well-worn scams to viral news aggregation; from foreign-intelligence operations to trolling; from opposition research to harassment. In their crudest use, the terms are simply jargon for “things I disagree with.” Attempts to define “disinformation” broadly enough as to rinse it of political perspective or ideology leave us in territory so abstract as to be absurd. As the literature review put it:

“Disinformation” is intended to be a broad category describing the types of information that one could encounter online that could possibly lead to misperceptions about the actual state of the world.

That narrows it down!

The term has always been political and belligerent. When dezinformatsiya appeared as an entry in the 1952 Great Soviet Encyclopedia, its meaning was ruthlessly ideological: “Dissemination (in the press, on the radio, etc.) of false reports intended to mislead public opinion. The capitalist press and radio make wide use of dezinformatsiya.” Today, journalists, academics, and politicians still frame the disinformation issue in martial language, as a “war on truth” or “weaponized lies.” In the new context, however, bad information is a weapon wielded in an occasionally violent domestic political conflict rather than a cold war between superpowers.

Because the standards of the new field of study are so murky, the popular understanding of the persuasive effects of bad information has become overly dependent on anecdata about “rabbit holes” that privilege the role of novel technology over social, cultural, economic, and political context. (There are echoes of Cold War brainwashing fears here.) These stories of persuasion are, like the story of online advertising, plagued by the difficulty of disentangling correlation from causation. Is social media creating new types of people, or simply revealing long-obscured types of people to a segment of the public unaccustomed to seeing them? The latter possibility has embarrassing implications for the media and academia alike.

An even more vexing issue for the disinformation field, though, is the supposedly objective stance media researchers and journalists take toward the information ecosystem to which they themselves belong. Somewhat amazingly, this attempt has taken place alongside an agonizing and overdue questioning within the media of the harm done by unexamined professional standards of objectivity. Like journalism, scholarship, and all other forms of knowledge creation, disinformation research reflects the culture, aspirations, and assumptions of its creators. A quick scan of the institutions that publish most frequently and influentially about disinformation: Harvard University, the New York Times, Stanford University, MIT, NBC, the Atlantic Council, the Council on Foreign Relations, etc. That the most prestigious liberal institutions of the pre-digital age are the most invested in fighting disinformation reveals a lot about what they stand to lose, or hope to regain. Whatever the brilliance of the individual disinformation researchers and reporters, the nature of the project inevitably places them in a regrettably defensive position in the contemporary debate about media representation, objectivity, image-making, and public knowledge. However well-intentioned these professionals are, they don’t have special access to the fabric of reality.

This spring, in light of new reporting and a renewed, bipartisan political effort to investigate the origins of COVID-19, Facebook announced that it would no longer remove posts that claimed that the coronavirus was man-made or manufactured. Many disinformation workers, who spent months calling for social-media companies to ban such claims on the grounds that they were conspiracy theories, have been awkwardly silent as scientists have begun to admit that an accidental leak from a Wuhan lab is an unlikely, but plausible, possibility.

Still, Big Disinfo can barely contain its desire to hand the power of disseminating knowledge back to a set of “objective” gatekeepers. In February, the tech news website Recode reported on a planned $65 million nonpartisan news initiative called the Project for Good Information. Its creator, Tara McGowan, is a veteran Democratic operative and the CEO of Acronym, a center-left digital-advertising and voter-mobilization nonprofit whose PAC is funded by, among others, Steven Spielberg, the LinkedIn co-founder Reid Hoffman, and the venture capitalist Michael Moritz. The former Obama campaign manager David Plouffe, currently a strategist at the Chan Zuckerberg Initiative, is an official Acronym adviser. Meanwhile, a February New York Times article humbly suggested the appointment of a “reality czar” who could “become the tip of the spear for the federal government’s response to the reality crisis.”

The vision of a godlike scientist bestriding the media on behalf of the U.S. government is almost a century old. After the First World War, the academic study of propaganda was explicitly progressive and reformist, seeking to expose the role of powerful interests in shaping the news. Then, in the late 1930s, the Rockefeller Foundation began sponsoring evangelists of a new discipline called communication research. The psychologists, political scientists, and consultants behind this movement touted their methodological sophistication and absolute political neutrality. They hawked Arendt’s “psychological premise of human manipulability” to government officials and businessmen, much as the early television ad executives had. They put themselves in the service of the state.

The media scholar Jack Bratich has argued that the contemporary antidisinformation industry is part of a “war of restoration” fought by an American political center humbled by the economic and political crises of the past twenty years. Depoliticized civil society becomes, per Bratich, “the terrain for the restoration of authoritative truth-tellers” like, well, Harvard, the New York Times, and the Council on Foreign Relations. In this argument, the Establishment has turned its methods for discrediting the information of its geopolitical enemies against its own citizens. The Biden Administration’s National Strategy for Countering Domestic Terrorism—the first of its kind—promises to “counter the polarization often fueled by disinformation, misinformation, and dangerous conspiracy theories online.” The full report warned not just of right-wing militias and incels, but anticapitalist, environmental, and animal-rights activists too. This comes as governments around the world have started using emergency “fake news” and “disinformation” laws to harass and arrest dissidents and reporters.

One needn’t buy into Bratich’s story, however, to understand what tech companies and select media organizations all stand to gain from the Big Disinfo worldview. The content giants—Facebook, Twitter, Google—have tried for years to leverage the credibility and expertise of certain forms of journalism through fact-checking and media-literacy initiatives. In this context, the disinformation project is simply an unofficial partnership between Big Tech, corporate media, elite universities, and cash-rich foundations. Indeed, over the past few years, some journalists have started to grouse that their jobs now consist of fact-checking the very same social platforms that are vaporizing their industry.

Ironically, to the extent that this work creates undue alarm about disinformation, it supports Facebook’s sales pitch. What could be more appealing to an advertiser, after all, than a machine that can persuade anyone of anything? This understanding benefits Facebook, which spreads more bad information, which creates more alarm. Legacy outlets with usefully prestigious brands are taken on board as trusted partners, to determine when the levels of contamination in the information ecosystem (from which they have magically detached themselves) get too high. For the old media institutions, it’s a bid for relevance, a form of self-preservation. For the tech platforms, it’s a superficial strategy to avoid deeper questions. A trusted disinformation field is, in this sense, a very useful thing for Mark Zuckerberg.

And to what effect? Last year, Facebook started putting warning labels on Trump’s misinformative and disinformative posts. BuzzFeed News reported in November that the labels reduced sharing by only 8 percent. It was almost as if the vast majority of people who spread what Trump posted didn’t care whether a third party had rated his speech unreliable. (In fact, one wonders if, to a certain type of person, such a warning might even be an inducement to share.) Facebook could say that it had listened to critics, and what’s more, it could point to numbers indicating that it had cleaned up the information ecosystem by 8 percent. Its critics, having been listened to, could stand there with their hands in their pockets.

As the virus seized the world last year, a new, epidemiological metaphor for bad information suggested itself. Dis- and misinformation were no longer exogenous toxins but contagious organisms, producing persuasion upon exposure as inevitably as cough or fever. In a perfect inversion of the language of digital-media hype, “going viral” was now a bad thing. In October, Anne Applebaum proclaimed in The Atlantic that Trump was a “super-spreader of disinformation.” A study earlier that month by researchers at Cornell found that 38 percent of the English-language “misinformation conversation” around COVID-19 involved some mention of Trump, making him, per the New York Times, “the largest driver of the ‘infodemic.’”

This finding resonated with earlier research suggesting that disinformation typically needs the support of political and media elites to spread widely. That is to say, the persuasiveness of information on social platforms depends on context. Propaganda doesn’t show up out of nowhere, and it doesn’t all work the same way. Ellul wrote of the necessary role of what he called “pre-propaganda”:

Direct propaganda, aimed at modifying opinions and attitudes, must be preceded by propaganda that is sociological in character, slow, general, seeking to create a climate, an atmosphere of favorable preliminary attitudes. No direct propaganda can be effective without pre-propaganda, which, without direct or noticeable aggression, is limited to creating ambiguities, reducing prejudices, and spreading images, apparently without purpose.

Another way of thinking about pre-propaganda is as the entire social, cultural, political, and historical context. In the United States, that context includes an idiosyncratic electoral process and a two-party system that has asymmetrically polarized toward a nativist, rhetorically anti-elite right wing. It also includes a libertarian social ethic, a “paranoid style,” an “indigenous American berserk,” a deeply irresponsible national broadcast media, disappearing local news, an entertainment industry that glorifies violence, a bloated military, massive income inequality, a history of brutal and intractable racism that has time and again shattered class consciousness, conspiratorial habits of mind, and themes of world-historical declension and redemption. The specific American situation was creating specific kinds of people long before the advent of tech platforms.

To take the whole environment into view, or as much of it as we can, is to see how preposterously insufficient it is to blame these platforms for the sad extremities of our national life, up to and including the riot on January 6. And yet, given the technological determinism of the disinformation discourse, is it any surprise that attorneys for some of the Capitol rioters are planning legal defenses that blame social-media companies?

Only certain types of people respond to certain types of propaganda in certain situations. The best reporting on QAnon, for example, has taken into account the conspiracy movement’s popularity among white evangelicals. The best reporting about vaccine and mask skepticism has taken into account the mosaic of experiences that form the American attitude toward the expertise of public-health authorities. There is nothing magically persuasive about social-media platforms; they are a new and important part of the picture, but far from the whole thing. Facebook, however much Mark Zuckerberg and Sheryl Sandberg might wish us to think so, is not the unmoved mover.

For anyone who has used Facebook recently, that should be obvious. Facebook is full of ugly memes and boring groups, ignorant arguments, sensational clickbait, products no one wants, and vestigial features no one cares about. And yet the people most alarmed about Facebook’s negative influence are those who complain the most about how bad a product Facebook is. The question is: Why do disinformation workers think they are the only ones who have noticed that Facebook stinks? Why should we suppose the rest of the world has been hypnotized by it? Why have we been so eager to accept Silicon Valley’s story about how easy we are to manipulate?

Within the knowledge-making professions there are some sympathetic structural explanations. Social scientists get funding for research projects that might show up in the news. Think tanks want to study quantifiable policy problems. Journalists strive to expose powerful hypocrites and create “impact.” Indeed, the tech platforms are so inept and so easily caught violating their own rules about verboten information that a generation of ambitious reporters has found an inexhaustible vein of hypocrisy through stories about disinformation leading to moderation. As a matter of policy, it’s much easier to focus on an adjustable algorithm than entrenched social conditions.

Yet professional incentives only go so far in explaining why the disinformation frame has become so dominant. Ellul dismissed a “common view of propaganda . . . that it is the work of a few evil men, seducers of the people.” He compared this simplistic story to midcentury studies of advertising “which regard the buyer as victim and prey.” Instead, he wrote, the propagandist and the propagandee make propaganda together.

One reason to grant Silicon Valley’s assumptions about our mechanistic persuadability is that it prevents us from thinking too hard about the role we play in taking up and believing the things we want to believe. It turns a huge question about the nature of democracy in the digital age—what if the people believe crazy things, and now everyone knows it?—into a technocratic negotiation between tech companies, media companies, think tanks, and universities.

But there is a deeper and related reason many critics of Big Tech are so quick to accept the technologist’s story about human persuadability. As the political scientist Yaron Ezrahi has noted, the public relies on scientific and technological demonstrations of political cause and effect because they sustain our belief in the rationality of democratic government.

Indeed, it’s possible that the Establishment needs the theater of social-media persuasion to build a political world that still makes sense, to explain Brexit and Trump and the loss of faith in the decaying institutions of the West. The ruptures that emerged across much of the democratic world five years ago called into question the basic assumptions of so many of the participants in this debate—the social-media executives, the scholars, the journalists, the think tankers, the pollsters. A common account of social media’s persuasive effects provides a convenient explanation for how so many people thought so wrongly at more or less the same time. More than that, it creates a world of persuasion that is legible and useful to capital—to advertisers, political consultants, media companies, and of course, to the tech platforms themselves. It is a model of cause and effect in which the information circulated by a few corporations has the total power to justify the beliefs and behaviors of the demos. In a way, this world is a kind of comfort. Easy to explain, easy to tweak, and easy to sell, it is a worthy successor to the unified vision of American life produced by twentieth-century television. It is not, as Mark Zuckerberg said, “a crazy idea.” Especially if we all believe it.