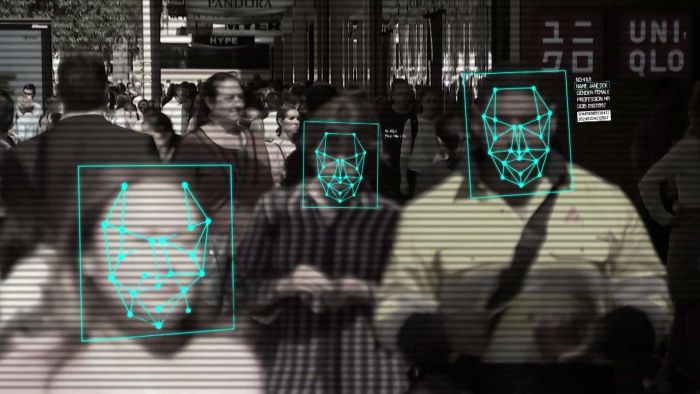

Facial recognition technology is probably not what we wanted as a society. But it’s way too late for that discussion.

29 July 2021 – Facial recognition software is not just for law enforcement anymore. Israel-based firm AnyVision’s clients include retail stores, hospitals, casinos, sports stadiums, and banks. Even schools are using the software to track minors with, it appears, nary a concern for their privacy. We learn this and more from “This Manual for a Popular Facial Recognition Tool Shows Just How Much the Software Tracks People”. Writer Alfred Ng reports that AnyVision’s 2019 user guide reveals the software logs and analyzes all faces that appear on camera, not only those belonging to persons of interest. A representative boasted that, during a week-long pilot program at the Santa Fe Independent School District in Texas, the software logged over 164,000 detections and picked up one student 1100 times.

A couple of privacy features are built in, but neither is turned on by default. “Privacy Mode” logs only the faces of those on a watch list, and “GDPR Mode” blurs non-watch-listed faces on playbacks and downloads. (Of course, what is blurred can be easily unblurred.) Whether a client uses those options depends on its use case and, importantly, local privacy regulations. Ng observes:

“The growth of facial recognition has raised privacy and civil liberties concerns over the technology’s ability to constantly monitor people and track their movements. In June, the European Data Protection Board and the European Data Protection Supervisor called for a facial recognition ban in public spaces, warning that ‘deploying remote biometric identification in publicly accessible spaces means the end of anonymity in those places.’ Lawmakers, privacy advocates, and civil rights organizations have also pushed against facial recognition because of error rates that disproportionately hurt people of color.

A 2018 research paper from Joy Buolamwini and Timnit Gebru highlighted how facial recognition technology from companies like Microsoft and IBM is consistently less accurate in identifying people of color and women. In December 2019, the National Institute of Standards and Technology also found that the majority of facial recognition algorithms exhibit more false positives against people of color. There have been at least three cases of a wrongful arrest of a Black man based on facial recognition.”

Schools that have implemented facial recognition software say it is an effort to prevent school shootings, a laudable goal. However, once the system is in place, it is tempting to use it for less urgent matters. Ng reports the Texas City Independent School District has used it to identify one student who was licking a security camera and to have another removed from his sister’s graduation because he had been expelled. As Georgetown University’s Clare Garvie points out:

“The mission creep issue is a real concern when you initially build out a system to find that one person who’s been suspended and is incredibly dangerous, and all of a sudden you’ve enrolled all student photos and can track them wherever they go. You’ve built a system that’s essentially like putting an ankle monitor on all your kids.”

Last weekend the lead story in the weekly newsletter for our TMT (technology, media, and telecom) sector subscribers was about facial recognition technology. I noted that some of the most popular stores in the U.S. – including Macy’s and Albertsons – are using facial recognition on their customers, largely without their knowledge. An organization called “Fight for the Future” has launched a nationwide campaign to document which retailers are deploying facial recognition, and which ones have committed to not use the technology. It is trying to draw attention to retail stores using facial-scanning algorithms to boost their profits, intensify security systems, and even track their employees.

And it’s another clear reminder of what we are talking about in this post: the reach of facial recognition goes far beyond law enforcement and into the private, commercial storefronts we regularly visit. This is a sphere of technology that is largely unregulated and undisclosed, meaning both customers and employees may be unaware that this software is surveilling them and collecting data about them. I suspect many people would be surprised to learn how many of the retailers they shop at regularly are using this technology in a variety of ways to protect, and to maximize, their profits.

But let’s be clear: stores using facial recognition isn’t a new practice. Last year, Reuters reported that the U.S. drugstore chain Rite Aid had deployed facial recognition in at least 200 stores over about eight years before suddenly ditching the software. In fact, facial recognition is just one of several technologies store chains are deploying to enhance their security systems, or to otherwise surveil customers. Some retailers, for instance, have used apps and in-store Wi-Fi to track users while they move around physical stores and later target them with online ads.

Regulation? Well, that’s a problem. Facial recognition is mostly unregulated, and almost all current efforts to rein in the technology focus on its use by government and law enforcement, not on commercial use. As I noted last weekend, the laws and spheres are so different that it would be impossible to write a clean, clearly understood bill regulating both commercial and government use. And while members of Congress have proposed several ideas for giving customers more protection against private companies’ use of facial recognition, there has yet to be significant regulation at the federal level. In the vast majority of cities and towns, there are no rules on when private companies can use surveillance tech, or on when they can share the information with police, with ICE (Immigration and Customs Enforcement), or even with private advertisers.

And in Europe? Oh, the irony. The EU, (mistakenly) considered to have the most stringent data protection rules in the world, opened the barn doors. It is now facing a backlash over the proposed artificial intelligence regulation it announced this past April. The rules allow for limited use of facial recognition by authorities – but critics say (and even the techno class that has diced the details agrees) that the carveouts will usher in a new age of biometric surveillance.

NOTE: as my TMT subscribers know from my briefing paper on the AI proposals published in April, the European Commission sought to strike a compromise between ensuring the privacy of citizens and placating governments that say they need the tech to fight terrorism and crime. The rules nominally prohibit police use of biometric identification systems like facial recognition in public places, except in cases of “serious crimes.” The Commission specified that this could mean cases related to terrorism, but critics warn the term is so vague that it can (and will) open the door to all kinds of surveillance based on spurious threats. The proposal also says nothing about corporations using the technology in public places.

The entire proposal is a hodgepodge of vague terms and a complete misunderstanding of how AI works. It is a cacophony of confusion.

No, facial recognition technology is probably not what we wanted as a society. But it’s way too late for that discussion.