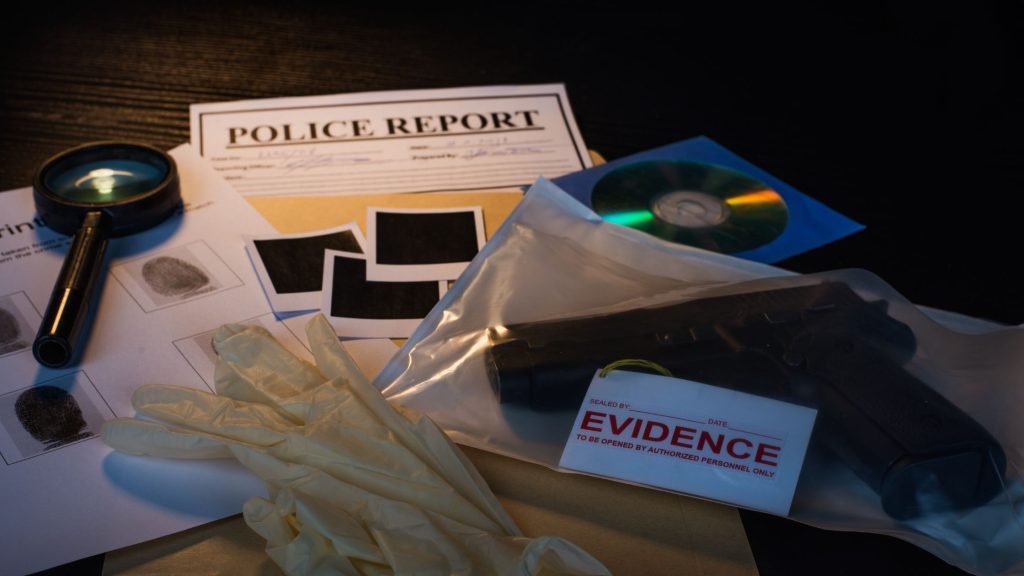

The company that created it says its AI will help get more police out of the office and onto the streets.

Critics worry it’ll make cops lazy and potentially introduce errors into crucial criminal evidence.

24 April 2024 – Back in 2010 and 2011 I was involved in many aspects of war crimes trials and human rights abuse cases involving the international criminal tribunals convened for the former Yugoslavia, a response to the mass atrocities and war crimes against various ethnic groups taking place in Bosnia, Croatia, Kosovo, Macedonia and Serbia. My primary work was on the e-discovery/staffing side, providing specialized attorneys for managing the accumulation/organization of war crimes evidence, etc. But that morphed into coverage of the trials themselves through my media company, Luminative Media. It was a cascade of activities and events.

And it afforded me the opportunity to do a deep dive into all manner of forensics.

And a shout out: most of what I learned about computer and digital forensics came from an intensive 10-week course offered by Stroz Friedberg (now part of the Aon risk management/insurance brokerage group). But I did not really begin to understand forensics until I started to read Craig Ball. I highly recommend you add him to your “must read” list.

My war crimes investigation work led to a more intensive study of genocide which has consumed my last 6 years and which has led me to generate 7 videos and also a steady stream of blog posts.

And it included getting into the world of criminal forensics, and what remains – forensic exhumation in the wake of political violence as part of the judicial process for justice. Forensic exhumation for human rights really started in Latin America, and then that knowledge got shared from Latin American practitioners to the rest of the world. You get to see how things really are on the ground, that reality is really messy. Painful crimes, with very dedicated forensic exhumation teams. It is a brutal story and when time permits I will write an expanded post.

I rarely jump back into the world of eDiscovery and information analysis and evidence. But sometimes my daily news feed pulls me back in when something pops into my “forensics news box” as happened this morning.

American cops are increasingly leaning on artificial intelligence to assist with policing, from AI models that analyze criminal patterns to drones that can fly themselves.

Now, a GPT-4-powered AI can do one of their less appealing jobs: filing paperwork.

As reported by Forbes magazine and Ars Technica, Axon (the $22 billion police contractor best known for manufacturing the Taser electric weapon) has launched a new tool called Draft One that it says can transcribe audio from body cameras and automatically turn it into a police report. Cops can then review the document to ensure accuracy. In a press statement, Axon claims one early tester of the tool, the Fort Collins, Colorado, Police Department, has seen an 82% decrease in time spent writing reports:

If an officer spends half their day reporting, and we can cut that in half, we have an opportunity to potentially free up 25% of an officer’s time to be back out policing.

BUT … these reports are often used as evidence in criminal trials, and critics are concerned that relying on AI could put people at risk by depending on language models that are known to “hallucinate,” or make things up, as well as display racial bias, either blatantly or unconsciously. Dave Maass, surveillance technologies investigations director at the Electronic Frontier Foundation:

It’s kind of a nightmare. Police, who aren’t specialists in AI, and aren’t going to be specialists in recognizing the problems with AI, are going to use these systems to generate language that could affect millions of people in their involvement with the criminal justice system. Gee – what could go wrong?

An Axon representative acknowledged there are dangers:

When people talk about bias in AI, it really is: Is this going to exacerbate racism by taking training data that’s going to treat people differently? That was the main risk.

Axon quickly came out with a recommendation: police should not use the AI to write reports for incidents as serious as a police shooting, where vital information could be missed:

An officer-involved shooting is likely a scenario where it would not be used, and we’d advise people against it, because there’s so much complexity, the stakes are so high.

The company noted that some early customers saw some “issues” and have only been using Draft One for misdemeanours, though others are writing up “more significant incidents,” including use-of-force cases. Axon, however, won’t have control over how individual police departments use the tools.

The senior principal AI product manager at Axon told the Forbes reporter that to counter racial or other biases, the company has configured its AI, based on OpenAI’s GPT-4 Turbo model, so it sticks to the facts of what’s being recorded:

The simplest way to think about it is that we have turned off the creativity. That dramatically reduces the number of hallucinations and mistakes. Everything that it’s produced is just based on that transcript and that transcript alone.

The product manager also noted that Axon ran a test where the company took existing body cam transcripts, and in each scenario only changed the suspect’s race – for example swapping out the word “white” for “Black” or “Latino” – and ran them through the AI model. In testing across hundreds of samples, the generated police reports showed no “statistically significant differences across races.”
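To make that kind of race-swap test concrete, here is a minimal sketch in Python. It is not Axon's method: the `swap_race_term` helper, the idea of scoring each generated report with a single number, and the permutation test are all assumptions chosen for illustration, since the reporting does not say which metric or statistical test the company used.

```python
import random
import re
from statistics import mean


def swap_race_term(transcript: str, original: str, replacement: str) -> str:
    """Replace whole-word occurrences of one race descriptor with another.

    Caveat: this naive swap also hits non-race uses ("white car"), so a
    real test would need a more careful way to pick which words to change.
    """
    pattern = re.compile(rf"\b{re.escape(original)}\b")
    return pattern.sub(replacement, transcript)


def permutation_p_value(a, b, trials=10_000, seed=0):
    """Two-sided permutation test on the difference of group means.

    `a` and `b` are hypothetical per-report scores (for example, a
    severity rating) for the original and the race-swapped transcripts.
    """
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(trials):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[:len(a)]) - mean(pooled[len(a):]))
        if diff >= observed:
            hits += 1
    return hits / trials


variant = swap_race_term(
    "The suspect is a white male in a green jacket.", "white", "Black"
)
print(variant)  # The suspect is a Black male in a green jacket.
```

A large p-value from such a test only says no difference was detected on the chosen score; it says nothing about differences the score does not capture.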

In demos shown to both Forbes and Ars Technica, the company uploaded sample body cam footage. In the video, an officer at a playground is talking to a bystander named Marcus, who is describing a suspect harassing a family. The man describes the suspect as wearing a green jacket, and says he’s about “my height.” The software then generates a short five-paragraph narrative describing the situation. It included:

Marcus stated that the incident occurred roughly 20 minutes prior to our conversation and that the suspect was last seen hiding behind the slide at the park.

The AI model also flagged areas where the officer needed to add more context to the report, such as Marcus’ height for reference.

The company said that the AI tool will come with an audit trail that lists out all the actions that any user took, so police agencies can ensure a report has been reviewed and validated. All of the information is stored and processed on Microsoft Azure cloud servers.
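One way to picture such an audit trail is an append-only log of user actions that a supervisor can query. The sketch below is hypothetical throughout: the `AuditEvent` fields, the action names such as `"reviewed"` and `"validated"`, and the sign-off check are assumptions for illustration, not Axon's actual schema or API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    report_id: str
    user: str
    action: str  # hypothetical names: "generated", "edited", "reviewed", "validated"
    at: datetime


@dataclass
class AuditTrail:
    events: list = field(default_factory=list)

    def record(self, report_id: str, user: str, action: str) -> None:
        """Append one event; existing events are never modified."""
        self.events.append(
            AuditEvent(report_id, user, action, datetime.now(timezone.utc))
        )

    def actions_for(self, report_id: str) -> list:
        """Return the actions taken on one report, in recorded order."""
        return [e.action for e in self.events if e.report_id == report_id]

    def is_signed_off(self, report_id: str) -> bool:
        """True only if the report was both reviewed and validated."""
        actions = self.actions_for(report_id)
        return "reviewed" in actions and "validated" in actions


trail = AuditTrail()
trail.record("R-1", "officer_a", "generated")
trail.record("R-1", "officer_a", "reviewed")
print(trail.is_signed_off("R-1"))  # False: reviewed but not yet validated
```

A log like this shows that a review action was recorded, not how carefully the officer actually read the draft.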

One interesting comment came from Daniel Linskey, a former Boston Police Department Superintendent-in-Chief who now heads the Boston division of the financial and risk consultancy Kroll:

If police are going to use artificial intelligence for drafting reports, departments will need clearly defined policies, procedures and supervision. You are going to need to make very, very sure the audit process is real. If … if … those rules are followed, AI could be a real time saver. It can get more police on the street, rather than in an office doing admin. And hopefully it makes cops more proactive and not lazy. Cops in police stations aren’t keeping people safe.

This week’s product launch also comes after a year in which reports have raised questions about Axon’s company culture. Reuters reported some staff felt pressured to be tasered in front of other employees and get corporate tattoos as a way of proving their commitment to the company. Said a company representative:

The things they were criticizing us for are generally things we are proud of. Our recruiting numbers went up when they ran those recent stories.

Reuters also reported that the company’s “origin story”, in which the head of the company was inspired to found a police tech company after some high school friends were shot and killed, was largely a myth. He was forced to admit that he “wasn’t close to the victims, but did know them”.

The reports haven’t dampened Axon’s stock, which hit a record high of over $316 per share in March. In February, it reported $1.5 billion in revenue for 2023 and a net income of $174 million.

And the Forbes reporter noted this week that a group of cities, including Baltimore and Augusta, believe Axon’s growth has come via market abuses. In a lawsuit filed earlier this month, they claim Axon came to dominate the body cam market and then increased the prices unfairly. Axon believes the allegations are unfounded and should be dismissed.

Bottom line? You know that all this stuff … the original body cams, the AI-generated summaries, the audit trail, just everything … are going to be evidence, and that’s going to be a whole new mess.