A short trip down the rabbit hole of algorithmic outputs and the structure of speech.

7 June 2022 – Over the past few years I’ve had the opportunity to read numerous books and long-form essays that question mankind’s relation to the machine – best friend or worst enemy, saving grace or engine of doom? Such questions have been with us since James Watt’s introduction of the first efficient steam engine in the same year that Thomas Jefferson wrote the Declaration of Independence. The authors I have returned to most over the last 10 years have been Charles Arthur, Ugo Bardi, Wendy Chun, Jacques Ellul, Lewis Lapham, Donella Meadows, Maël Renouard, Matt Stoller, Simon Winchester – and Henry Kissinger. Yes, THAT Henry Kissinger.

But lately these questions have been fortified with the not entirely fanciful notion that machine-made intelligence, now freed like a genie from its bottle, and still writhing and squirming through its birth pangs (yes, these are still the very early years of machine learning and AI), will soon begin to grow phenomenally, will assume the role of some fiendish Rex Imperator, and will ultimately come to rule us all, for always, and with no evident existential benefit to the sorry and vulnerable weakness that is humankind.

As most of my regular readers know, one of my favorite current authors to add to the list above is Jennifer Petersen (currently an associate professor of communication at the USC Annenberg School for Communication and Journalism in Los Angeles), who has written extensively on the history of free speech law, the social and economic implications of our newest communication technologies, and the expansion of corporate personhood toward artificial intelligence.

Her newest book is How Machines Came to Speak: Media Technologies and Freedom of Speech (just out this past April), which I had a chance to finish over the weekend. She has given a few presentations on the book as part of the “Age of the Cognitive” series organized by Aeon, the MIT Media Lab and Nature magazine.

NOTE: this was part of the same series that presented the work of Austin Clyde, John Cook, and Stephan Lewandowsky, who showed that the psychological drivers of belief in misinformation will always resist correction, so misinformation is just something we need to live with. C’est la vie.

Let’s start with the obvious. Recommendation systems permeate our online lives. They structure what we buy, read, watch, listen to, even what we think. Though the inner workings of these algorithmic systems remain hidden from us, we encounter them across almost all digital platforms. Google, Amazon, Netflix, Facebook, Alibaba, Twitter, TikTok, Instagram, Spotify and myriad others use recommendation systems to direct us to certain types of content and products. We see the results of these systems any time we try to search for something or are presented with an ordered list of options, often with a ranking and hierarchy. But what exactly are these lists?

Well, you might think of them as mere “data”.

NOTE: there is no such thing as “data”. That’s tomorrow’s essay 😎

You might suspect they are the preferred choices of a company, brand or search engine. You might think of them as reflections of the archived search histories of countless other users. You may even suspect that they’re based on records of your own purchases, or particular words and syntax from your previous search queries. But would you ever think of these lists of recommendations as opinions – the utterances of an algorithm, or the company that created it?

In her new book, Petersen explains that recommendation systems pose difficult questions about what it means to speak, and whether speaking is something that only a person does. How do we draw a line between expressions and actions? And who (or what) can be considered a ‘speaker’? In her work, she examines how such questions have been debated and decided in law. What she has found has surprised her: machines are reshaping how speech is regulated and defined, and they’re determining who has a voice in the public sphere. This is the case with search results and other algorithmic expressions. But can these recommendations really be considered “opinions” or “expressions”? Untangling such problems raises broader questions about the changing nature of speech itself: in a public sphere populated by human, corporate and algorithmic utterances, what exactly does it mean to speak?

Companies like Google have argued that their search results are not data, nor a commercial product, nor an action. Instead, they’re a form of speech or opinion, like that of a newspaper editor. Google has done this, in part, for pragmatic reasons. Within the U.S., opinions are a form of expression that enjoys constitutional protection against regulation and many kinds of lawsuits, which is why so much hinges on whether a particular activity is understood as an example of “speech” or “expression”. If search results are “speech”, then the companies that produce them are insulated from governmental regulation and protected from a range of legal threats, including accusations of anti-competitive practices, allegations of libel, or other complaints from competitors, content creators or users. This is why Google prefers that you – or rather, the U.S. legal system – think of its search results as opinions.

From this perspective, characterising algorithmic outputs and decisions as opinions may seem like spin, an attempt by companies to game the system and find the most advantageous set of precedents. But there is also something much deeper going on in these debates. Google and other tech companies have made these characterisations because their search results really do resemble opinions – but there are important differences, too. That is, these “opinions” overlap with human judgments and statements but are not equal to them. We do not yet have a good way of talking and thinking about this type of ‘speech’ or ‘expression’. Says Petersen:

We may be tempted to use words like ‘opinion’ to describe the ‘speech’ of search results because algorithmic outputs share some similarities with human opinions and expression. Algorithms are constructed by humans and involve ideas, beliefs and value judgments. Search algorithms, for example, involve human decisions about how to define relevance and quality in search results, and how to operationalise these definitions. This happens, for example, when an algorithm’s creators must decide whether quality is best defined by many links to a website or by links from highly recognised and reputable sites. In this way, creators are engaging in high-level decision-making that resembles editorial decisions about newsworthiness. Even so, there are important differences between algorithmic outputs and how ‘speech’ is understood.
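To make that point about defining “quality” concrete, here is a toy sketch in Python. It is my own illustration, not anything from Petersen’s book or from Google’s actual systems. It scores the same hypothetical ten-page web in two ways. The first counts raw inbound links. The second uses a textbook PageRank-style calculation, in which a link from a well-linked page counts for more. The page names, the damping factor of 0.85 and the iteration count are all assumptions chosen for illustration. Real search engines combine far more signals than this.

```python
# Toy illustration only: a hypothetical ten-page web, not real data.
# Each page maps to the list of pages it links to.
LINKS = {
    "fan1": ["reputable_site"], "fan2": ["reputable_site"],
    "fan3": ["reputable_site"], "fan4": ["reputable_site"],
    "minor1": ["many_links_site"], "minor2": ["many_links_site"],
    "minor3": ["many_links_site"],
    "reputable_site": ["endorsed_site"],
    "many_links_site": [],
    "endorsed_site": [],
}


def rank_by_link_count(links):
    """Definition 1: quality = how many pages link to you."""
    counts = {page: 0 for page in links}
    for targets in links.values():
        for target in targets:
            counts[target] += 1
    return sorted(counts, key=counts.get, reverse=True)


def pagerank(links, damping=0.85, iterations=100):
    """Definition 2: quality = links weighted by the linker's own score.

    Textbook power iteration; damping and iteration count are assumptions.
    """
    n = len(links)
    scores = {page: 1.0 / n for page in links}
    for _ in range(iterations):
        # Score held by pages with no outbound links is spread evenly.
        dangling = sum(scores[p] for p, out in links.items() if not out)
        base = (1 - damping) / n + damping * dangling / n
        new = {page: base for page in links}
        for page, targets in links.items():
            for target in targets:
                # Each page splits its score among the pages it links to.
                new[target] += damping * scores[page] / len(targets)
        scores = new
    return scores


def rank_by_pagerank(links):
    scores = pagerank(links)
    return sorted(scores, key=scores.get, reverse=True)


print(rank_by_link_count(LINKS)[:3])  # ['reputable_site', 'many_links_site', 'endorsed_site']
print(rank_by_pagerank(LINKS)[:3])    # ['endorsed_site', 'reputable_site', 'many_links_site']
```

The data is the same in both runs, but the two defensible definitions of “quality” produce different winners. Under the second definition, a page with a single link from a well-linked site outranks a page with three links from obscure ones. That choice is exactly the kind of editorial-looking judgment the quote describes.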

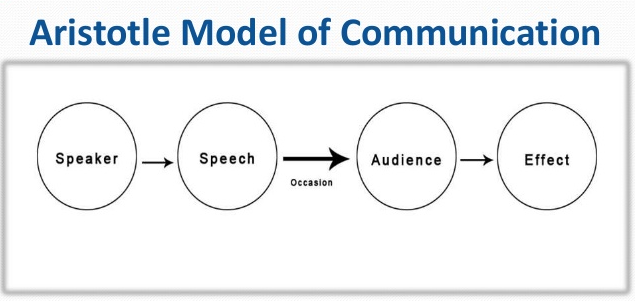

Speech is often defined in ways that connote human consciousness, intentionality, personality and agency. When we talk about speech, we use terms such as “opinion”, “judgment”, “utterance”, “advocacy”, “persuasion”, “conscience”, “beliefs” and “feelings”. These terms have a rich history in humanist thought, as well as in legal reasoning about freedom of speech. Traditionally, speaking has involved an individual externalising an idea or feeling through spoken or written words, images, music or even dress, gesture, dance and other actions.

This is reflected in law: legal protections for freedom of speech in the U.S. include actions such as saluting (or burning) a flag, wearing armbands, sitting or standing in silent protest, naked dancing, watching movies, playing phonographs, and more. In all these examples, the act is an expression because it conveys to others some form of mental activity or belief held by the person doing the “expressing”. This is the traditional, liberal conception of what it means to speak. In this tradition, speech or speaking are closely tied to what it means to be a person and a participant in a political community. We express this idea when we refer to “speaking subjects” or an individual or group ‘having a voice’. Petersen:

Algorithmic outputs do not quite fit this definition. They may be shaped by the values, intentions and beliefs of their creators, but they are not equal to them. They display what I call a distinct structure of speech. They do not involve a speaker who wishes to express a particular idea, opinion or belief (as in a typical conversation, publication or expression of opinion). In the complex algorithmic systems that characterise much of our current media landscape, the chains of causality and intent between creators of the algorithm and its outputs are often attenuated or indirect. Problems appear when we try to think about these outputs as expressions of intentionality, agency or judgments – that is, like the liberal conception of speech.

The outputs of algorithmic systems are shaped by, but not reducible to, the ideas, judgments, beliefs or values of their designers. Search results do not reflect or express what either Google or its engineers think about your search query. Instead, these results reflect what they think about how to define relevance and quality in search queries in general. As such, we can’t simply describe these outputs as the expressions of their human creators. In fact, in many such systems, designers are not the only sources of judgment and values. Complex algorithmic systems are dynamic, and the outputs of these systems depend on multiple and often changing streams of data drawn from crowdsourced online activity. As the political geographer (and algorithm expert) Louise Amoore puts it in her book Cloud Ethics, “each and every one of us” is conscripted into writing these systems. We do so without intent, knowledge or coordination.

Another example from Google helps to illustrate this dynamic. In this case, the company claimed that its search results were not its opinion but a mere reflection of popular belief. Circa 2004, the top result in searches for the term “Jew” was an antisemitic website. While the content of the website was clearly the expression of its creator’s ideas, the source or cause of the recommendation itself was (and remains) less clear. Google argued that the ranking did not reflect the company’s values or beliefs: it was not Google’s opinion but a pure reflection of what was popular online. The results were the sum of judgments from a crowd of anonymous others who had linked to or interacted with the antisemitic website. These crowdsourced judgments or decisions are not produced by a set of people deliberately working together towards an end. Instead, they emerge from a variety of online interactions with varying and often opaque causal relations to the algorithmic output.

Again, the company was positioning itself in the most advantageous legal position regarding its search results: in this case, distance, and a lack of responsibility. Yet, at the same time, Google’s arguments demonstrate how algorithmic outputs are both like and unlike opinions. Because recommendations are shaped by the values and priorities of their designers, creators do bear some responsibility for them. But they are also not equal to the expression of a singular set of designers. In other words, recommendations do not fit the classic, liberal model of speech. Algorithmic expression is something different: speech without speakers, or speech unmoored from personhood.

Attempting to think through the implications of algorithmic systems via the classic models of speech and expression isn’t helpful, but that hasn’t stopped Google and other companies from trying. The presumption of a direct link between expression and intentionality, and of an agent (like an individual human person) behind the speech, has been central to the corporate opportunism outlined above. Google has used these arguments to position itself as a classic speaker, or person, who can claim legal rights – and a voice in the public sphere. These arguments would have us ask: who is really speaking? But that’s the wrong question. Searching for a particular agent or speaker in cases of algorithmic expression isn’t likely to help us think through the complex ethical and political questions surrounding these systems because algorithmic outputs are the products of dynamic systems and many different (often unwitting) actors. Petersen:

Instead, algorithmic expression might present us with the opportunity to rethink the limits of our current conceptions of what it means to speak as a person. As algorithms increasingly define the ways we know the world in the 21st century, we need more ways to trace lines of causality and intent in expression. We need language and frameworks that will allow us to recognise new forms of agency and responsibility – attenuated forms that fall below the threshold we imagine for human speakers.

Rather than thinking about online recommendations as the opinions of a company or a set of engineers who designed the system, we might understand them as speech with a different structure, exhibiting a different sort of expression and agency. Algorithmic utterances are more than just an amalgamation of the decisions and actions of designers and others at the companies who build them. When you look at your search results and other forms of recommendations, you’re also seeing, all at once: a weave of mere data; the values or goals of a company, brand or set of designers; the opinions of others who have read, watched or linked to the results you are looking at (and to results you do not see); reflections of the archived search histories of myriad users – including you. This kind of distributed expression is not proof that algorithms are persons, or that their ‘opinions’ really are like those of humans, or even companies. Instead, it suggests something we’re perhaps even less prepared for: a way of thinking and talking about speech without the need to find a fully intentional speaker lurking somewhere behind it.

More to come.