Missing documentation and obsolete environments force participants in the Ten Years Reproducibility Challenge to get creative

9 November 2020 (Chania, Crete) – This past September (which seems eons ago) “Nature” magazine and the French National Institute for Research in Digital Science and Technology (based in Bordeaux) had a Zoom chat on the Ten Years Reproducibility Challenge. Conceived in 2019, the challenge dares scientists to find and re-execute old code, to reproduce computationally driven papers they had published ten or more years earlier. Participants were supposed to discuss what they learnt at a workshop in Bordeaux this past June, but COVID-19 scuppered those plans. The event has been tentatively rescheduled for June 2021. Some notes from the chat follow. I took these largely from my Otter.ai transcript, so apologies for any typos or syntax errors.

As Nicolas Rougier tells it, what he needed was a disk. Not a pocket-sized terabyte hard drive, not a compact disc – an actual floppy disk.

For those readers who missed the 1980s, the original floppy disk was a flexible, flimsy disk inside a square sleeve with a hole in the centre and a notch in the corner, holding a couple of hundred kilobytes of data. In the 1983 cold-war film “WarGames”, high-school hacker David Lightman uses one to break into the school’s computer and give his girlfriend top marks in biology; he later hacks into a military network, narrowly averting a global thermonuclear war.

Rougier’s need was more prosaic than David Lightman’s. He just wanted to transfer a text file from his desktop Mac to a relic of the computational palaeolithic: a vintage Apple II, the company’s first consumer product, introduced in 1977.

Rougier is a computational neuroscientist and programmer at INRIA, the French National Institute for Research in Digital Science and Technology in Bordeaux. That file transfer marked the final stage of his picking up a computational gauntlet he himself threw down: the Ten Years Reproducibility Challenge I noted above.

Although computation plays a key and ever-larger part in science, scientific articles rarely include their underlying code, Rougier says. Even when they do, it can be difficult for others to execute it, and even the original authors might encounter problems some time later. Programming languages evolve, as do the computing environments in which they run, and code that works flawlessly one day can fail the next.

Some background: in 2015, Rougier and Konrad Hinsen, a biophysicist at the French National Centre for Scientific Research (CNRS) in Orléans, launched ReScience C, a journal that documents researchers’ attempts to replicate computational methods published by other authors, based only on the original articles and their own freshly written open-source code. Reviewers then vet the code to ensure it works. But even under those idealized circumstances (reproducibility-minded authors, computationally savvy reviewers and fresh code), the process can prove difficult.

The Ten Years Reproducibility Challenge aims “to find out which of the ten-year-old techniques for writing and publishing code are good enough to make it work a decade later”, Hinsen says. It was timed to coincide with the 1 January 2020 ‘sunset’ date for Python 2, a popular language in the scientific community, after 20 years of support. (Development continues in Python 3, launched in 2008, but the two versions are sufficiently different that code written in one might not work in the other.)
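To make the Python 2/3 incompatibility concrete, here is one well-known example (a general illustration, not taken from any challenge entry): the `/` operator. In Python 2, dividing one integer by another floors the result, so `7 / 2` gives `3`; in Python 3, `/` is true division and gives `3.5`, and the old behaviour has to be requested explicitly with `//` (Python 2 code could opt in early with `from __future__ import division`). The sketch below runs under Python 3; the function names are hypothetical.

```python
def mean_of_counts(counts):
    """Average a list of integer counts.

    Under Python 2 this same expression would silently floor the result
    for integer inputs; under Python 3 it returns a float.
    """
    return sum(counts) / len(counts)


def mean_of_counts_py2_style(counts):
    """Reproduce Python 2's integer '/' behaviour explicitly with '//'."""
    return sum(counts) // len(counts)


counts = [3, 4]
print(mean_of_counts(counts))            # 3.5 under Python 3
print(mean_of_counts_py2_style(counts))  # 3, what Python 2's '/' would have given
```

A change like this does not crash anything; it quietly shifts the numbers, which is exactly the kind of difference that is hard to spot when re-running old analysis code.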

“Ten years is a very, very, very, very long time in the software world,” offered Victoria Stodden, who studies computational reproducibility at the University of Illinois at Urbana-Champaign. In establishing that benchmark, she says, the challenge effectively encourages researchers to probe the limitations of code reproducibility over a period that “is roughly equivalent in the software world to infinity”.

The challenge had 35 entrants. Of the 43 articles they proposed reproducing, 28 resulted in reproducibility reports. ReScience C began publishing their work earlier this year. The programming languages used ranged from C and R to Mathematica and Pascal; one participant reproduced not code but a molecular model, encoded in Systems Biology Markup Language (SBML).

Like archaeological digs for the digital age, the participants’ reproduction attempts also suggest strategies for maximizing code reusability in the future. One common thread is that reproducibility-minded scientists need to up their documentation game. “In 2002, I felt like I would just remember everything forever,” says Karl Broman, a biostatistician at the University of Wisconsin, Madison. “It was only later that it became clear that you start to forget things within a month.”

We redo science

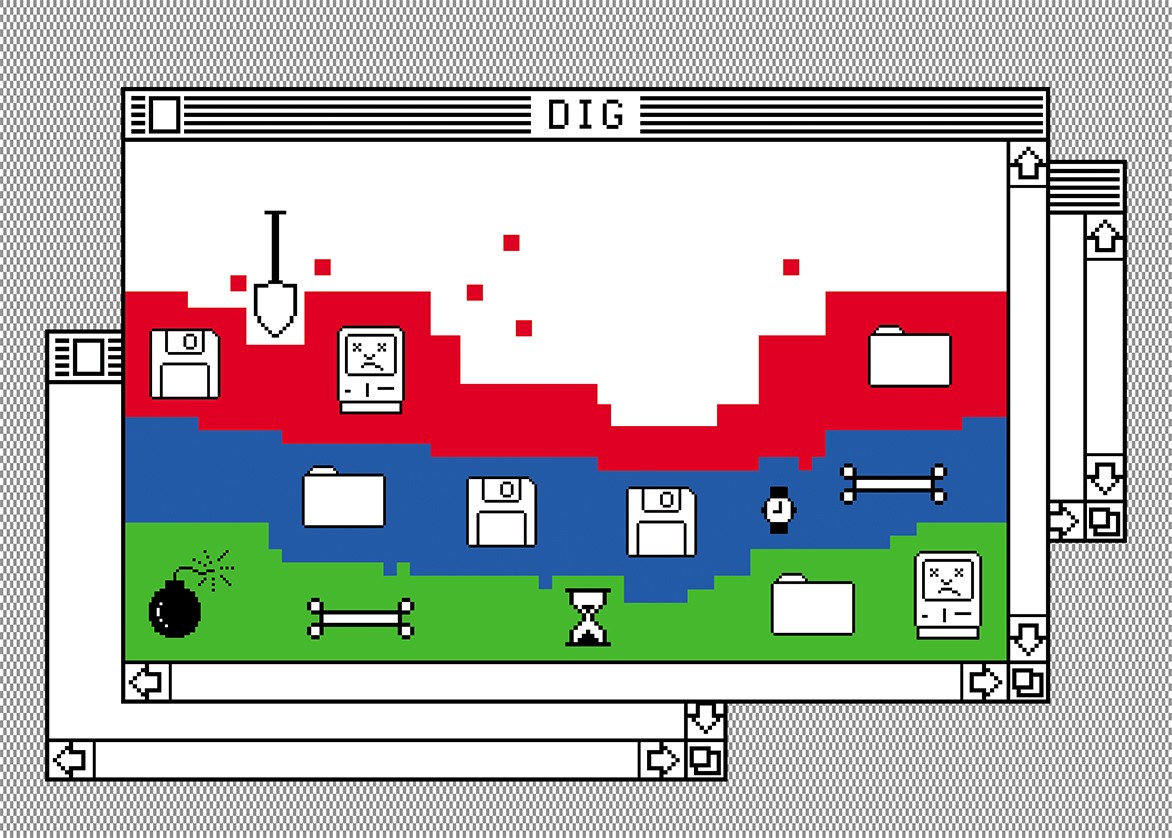

Rougier’s entry reproduces the oldest code in the challenge, an image magnifier for the Apple II that he wrote aged 16 and published in a now-defunct French hobbyist’s magazine called Tremplin Micro. (The oldest scientific code in the challenge, described in an as-yet-unpublished paper submitted to ReScience C, was a 28-year-old program written in Pascal for visualizing water-quality data.) Thirty-two years later, Rougier no longer remembers precisely how the code, with its arcane AppleSoft BASIC instructions, works — “which is weird, because I wrote it”. But he was able to find it online and make it run on a web-based Apple II emulator. That, he says, was the easy bit; the hard bit was running it on an actual Apple II.

The hardware wasn’t the problem: Rougier had an Apple II in his office, salvaged when a colleague was cleaning out their office. “For the younger people it’s, ‘oh, what’s this?’,” he says. “So you explain, ‘this is a computer’. And for older people, it’s, ‘oh, yeah, I remember this machine’.” But because the Apple II pre-dates both USB cables and the Internet, and because modern computers cannot talk directly to old disk drives, Rougier needed some custom hardware, not to mention a box of vintage floppies, to allow the computer to load the code. He found the floppies on Amazon, marked ‘new’ but dating from 1993. Once he had written his data to them three times over to make sure the bits were stable, the disks worked.

Bruno Levy, a computer scientist and director of an INRIA research centre in Nancy, reviewed Rougier’s write-up. Levy also has an Apple II, and posted a short video of the result to Twitter. With a sturdy ‘click clack’ at the old-school keyboard, he calls up the code and runs it, a stylized “We redo science!” screen rendering slowly in monochromatic green.

Extinct hardware, dead languages

When Charles Robert, a biophysical chemist at the CNRS in Paris, learnt about the challenge, he decided to use it to revisit a research topic he hadn’t looked at in years. “It gave me an additional kind of kick to get going in that direction again,” he says.

In 1995, Robert was modelling the three-dimensional structure of eukaryotic chromosomes in computational notebooks running Mathematica, a commercial package. Robert has Mathematica on his MacBook, but for fun, he spent €100 (US$110) on a Raspberry Pi, a single-board hobbyist computer that runs Linux and has Mathematica 12 pre-installed.

Robert’s code ran largely without issue but exposed difficulties that can arise with computational notebooks, such as deficiencies in code organization and code fragments that are run out of order. Today, Robert circumvents these problems by breaking his code into modules and implementing code tests. He also uses version control to track changes to his code and notes which version of his software produced each set of results. “When I look at some of my old code, I cringe sometimes and think how I would do it better now,” he says. “But I also think that process helped to lock in some of the lessons I’ve picked up since then.”
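Robert’s habit of noting which version of his software produced each set of results can be as simple as writing a small “provenance” file next to every output. The following is a minimal sketch in Python, assuming results are saved as files. The function name `save_with_provenance`, the `.provenance.json` naming convention and the example values are hypothetical; `code_version` stands in for whatever identifier you track, such as a Git commit hash.

```python
import json
import platform
import sys
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def save_with_provenance(result_text, out_path, code_version, parameters):
    """Write a result file plus a JSON sidecar describing how it was made.

    code_version: identifier for the code that produced the result,
        e.g. a Git commit hash (hypothetical convention).
    parameters: dict of the settings used for this run.
    """
    out_path = Path(out_path)
    out_path.write_text(result_text)

    provenance = {
        "result_file": out_path.name,
        "code_version": code_version,
        "parameters": parameters,
        "python_version": sys.version.split()[0],
        "platform": platform.platform(),
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }
    # The sidecar sits next to the result, e.g. figure5_data.txt.provenance.json
    sidecar = out_path.with_name(out_path.name + ".provenance.json")
    sidecar.write_text(json.dumps(provenance, indent=2, sort_keys=True))
    return sidecar


# Example: record a placeholder result together with its provenance.
workdir = Path(tempfile.mkdtemp())
sidecar = save_with_provenance(
    "0.42\n",                         # placeholder result, not real data
    workdir / "figure5_data.txt",
    code_version="abc1234",           # placeholder commit hash
    parameters={"seed": 42, "n_steps": 1000},
)
print(sidecar.read_text())
```

Keeping the record as plain JSON rather than in a proprietary format means it should stay readable even if the tool that wrote it does not.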

Robert’s success in the challenge is typical: only two of the 13 reproducibility write-ups published so far document failed attempts. One was from Hinsen, who was stymied by the magnetic tapes on which he methodically stored his code in the early 1990s. “That’s the problem of actually making backups but not checking that you can still read your backups ten years later,” he says. “At some point you have this nice magnetic tape with a backup, and no reader for it any more.” (Hinsen also published a successful attempt). Other researchers who failed to complete the challenge blamed a lack of time, especially in light of the pandemic.

Another common issue that participants faced was that of obsolete computing environments. In 1996, Sabino Maggi, now a computational physicist at the Italian National Research Council’s Institute of Atmospheric Pollution Research in Bari, used the computer language Fortran to model a superconducting device called a Josephson junction, processing the results with Microsoft Visual Basic. Fortran has changed little in the intervening years, so after a few tweaks Maggi’s code compiled without issue. Visual Basic posed a bigger problem.

Visual Basic is a dead language: it has long since been replaced by Visual Basic .NET, which shares only its name with its forefather. To run it, Maggi had to recreate a decades-old Windows computer as a virtual machine on his Mac. He loaded it with Microsoft DOS 6.22 and Windows 3.11 (both from around 1994) as well as Visual Basic, using installation disks he found online. “Even after so many years, the legitimacy of installing proprietary software in an emulator might be questionable,” Maggi concedes. But as he had valid licences for these tools at the time of his original research, he says, he felt “at least morally authorized” to use them.

But which version of Visual Basic to try? Microsoft released multiple versions of the language over the years, which were not always backwards-compatible. Maggi could no longer recall which version he was using in 1996, and a basement water leak had destroyed the old notebooks in which he had logged those details. “I had to start from scratch,” he says.

A Mac emulating a 1994 Windows computer to run Microsoft Visual Basic (slide from Sabino Maggi presentation)

Ludovic Courtès, a research engineer at INRIA in Bordeaux, reproduced a 2006 study comparing different data-compression strategies, whose code was written in C. But changes to the application programming interfaces (APIs) that programmers rely on prevented his code from compiling using current software libraries. “Everything has been evolving — except, of course, some of the pieces of software that were used for the paper,” he says. He ended up having to roll back half a dozen computational components to older versions — a ‘downgrade cascade’. “It’s a bit of a rabbit hole,” he says.

Today, researchers can use Docker containers and Conda virtual environments (see my postscript below) to package computational environments for reuse. But several participants chose an alternative that, Courtès suggests, “could very much represent the ‘gold standard’ of reproducible scientific articles”: a Linux package manager called Guix. It promises environments that are reproducible down to the last bit, and transparent in terms of the version of the code from which they are built. “The environment and indeed the whole paper can be inspected and can be built from source code,” he says. Hinsen calls it “probably the best thing we have right now for reproducible research”.

Documentation needed

In his reproducibility attempt, Roberto DiCosmo, a computer scientist at INRIA and the University of Paris, highlighted another common difficulty for challenge participants: locating their code in the first place. DiCosmo tackled a 1998 paper that described a parallel programming system called OcamlP3l. He searched his hard disk and back-ups, and asked his 1998 collaborator to do likewise, but came up empty. Then he searched Software Heritage, a service DiCosmo himself had founded in 2015. “There it was, incredible,” he says.

Software Heritage regularly crawls code-sharing sites such as GitHub, plus more deeply embedded and commercial sites hosting software for cybersecurity, legal technology, media and natural-language processing (NLP), science and other fields, doing for source code what the Internet Archive does for web pages. Developers can also request that the service archive their repositories, and the challenge rules required participants to do so. DiCosmo didn’t start his search at Software Heritage because the service did not exist when he developed OcamlP3l. Somebody must have posted his code to the now-extinct repository Gitorious; Software Heritage archived the site before it shut down, bringing OcamlP3l along for the ride.

Of course, finding the code doesn’t mean it’s obvious how to use it. Broman, for instance, reports that missing documentation and “quirky” file organization meant he had difficulty working out exactly which code he needed to run to reproduce his 2003 study. “And so I had to resort to actually reading the original article,” he writes.

“It’s not unusual for the number of lines of documentation [in well-organized programs] to actually exceed your code,” says Karthik Ram, a computational-reproducibility advocate at the University of California, Berkeley. “Having as much of that in there, and then having a broader description of how the analysis is structured, where the data come from, some metadata about the data and then about the code, is kind of key.”

Melanie Stefan, a neuroscientist at the University of Edinburgh, UK, used the challenge to assess the reproducibility of her computational models, written in SBML. Although the models were where she expected them to be, she could not find the values she had used for parameters such as molecular concentrations. Also not well documented were key details of data normalization. As a result, Stefan was unable to reproduce part of her study. “Even things that are kind of obvious at the time that you work on a model are no longer obvious, even to the same people, 10 or 12 years later — surprise!” she deadpans.

Reproducibility spectrum

Stefan’s experience galvanized her to initiate laboratory-wide policies focusing on documentation — for instance, supplementing models with files that say, “to reproduce figure 5, this is exactly what you need to do”.

But developing such resources takes time, Stodden notes. Cleaning and documenting code, creating test suites, archiving data sets, reproducing computational environments — “that’s not something that’s turnkey”. Researchers have few incentives to do those things, she adds, and there’s scant consensus in the scientific community on what a reproducible article should even look like. To complicate matters, computational systems continue to evolve, and it’s hard to predict which strategies will endure.

Reproducibility is a spectrum, notes Carole Goble, a computer scientist and reproducibility advocate at the University of Manchester, UK. It ranges from scientists repeating their own analyses, to peer reviewers test-driving code to show that it works, to researchers applying published algorithms to fresh data. Likewise, there is a spectrum of actions that researchers can take to try to ensure reproducibility (see ‘Reproducibility checklist’), but it can be overwhelming. If nothing else, Goble says, release your source code, so that in future, others can browse it and rewrite it as needed — “reproducibility-by-reading”, as Goble calls it. “Software is a living thing,” she says. “And if it’s living it will eventually decay, and you will have to repair it, and you’ll have to replace it.”

Counter-intuitively, many challenge participants found that code written in older languages was actually the easiest to reuse. Newer languages’ rapidly evolving APIs and reliance on third-party libraries make them vulnerable to breaking. In that sense, the sunsetting of Python 2.7 at the start of this year represents an opportunity for scientists, Rougier and Hinsen note. Python 2.7 puts “at our disposal an advanced programming language that is guaranteed not to evolve anymore”, Rougier writes¹.

Whichever language and reproducibility strategies they use, researchers would be wise to put them to the test, says Anna Krystalli, a research software engineer at the University of Sheffield, UK. Krystalli runs workshops called ReproHacks, at which researchers submit their own published papers, code and data, and participants try to reproduce them. Often, she says, they cannot: crucial details, obvious to the authors but opaque to others, are missing. “All the materials that we’re producing, if we don’t actually use them or engage with them then we don’t really know if they are reproducible,” Krystalli says. “It’s much harder, actually, than people think.”

Postscript

We briefly discussed how the science community is making code accessible through cloud services and “container platforms” that let researchers run each other’s software, check the results and store the software. If time permits, I will expand this postscript, but here are a few points:

Docker is a software tool that generates “containers” – standardized computational environments that can be shared and reused. Containers ensure that computational analyses always run on the same underlying infrastructure, fostering reproducibility. Docker thereby insulates researchers from the challenges of installing and updating research software. However, it can be difficult to use.

But many scientists are developing templates and becoming very proficient at migrating Docker configuration files (called “Dockerfiles”) from one project to the next, making minor tweaks and getting them to work.

Further, a growing collection of services allows researchers to sidestep such confusion. Using these services — which include Binder, Code Ocean, Colaboratory, Gigantum and Nextjournal — researchers can run code in the cloud without needing to install more software. They can lock down their software configurations, migrate those environments from laptops to high-performance computing clusters and share them with colleagues. And they stay *preserved* in the cloud. It’s never been easier to understand, evaluate, adopt and adapt the computational methods on which modern science depends.

Scientific software differs from most other software in that it often requires installing, navigating and troubleshooting a byzantine network of computational “dependencies”: the code libraries and tools on which each software module relies. Some have to be compiled from source code or configured just so, and an installation that should take a few minutes can degenerate into a frustrating online odyssey through websites such as Stack Overflow and GitHub.

The Alan Turing Institute in London is working on a huge project to simplify, adopt and adapt computational methods and store them in the cloud.

And then there is the Swiss idea …

In May 2010, a consortium of European libraries and research institutions entombed a metal box containing our collective “digital genome” in a former Swiss Air Force bunker beneath the Alps, taking inspiration from seed banks and animal genome projects. Though the act was in part a publicity stunt, the box also bore deadly serious news: digital media, the supposedly immortal replacement for analog media, is itself subject to decay, even death. Rather than a perfect replacement for the organic (and thus impermanent) media of paper or microfilm, the consortium explains, digital media is in fact “brittle and short-lived.”

Part of this reflects the physical substrate on which digital media is kept: a recordable DVD lasts perhaps 15 years before the dye fades, with some brands showing ill effects in as little as 1.9 years; magnetic hard drives fare worse. A bigger problem is the rapidly changing nature of file formats and decoding systems, which means a user can rarely open a file created a decade ago without significant difficulty.

NOTE: those of you who have access to the BBC archive will remember the BBC’s 1986 “Domesday Project”, which was conceived 900 years after the original Domesday Book and intended to be read 900 years into the future. But programmed in the obsolete BCPL language, stored on an equally obsolete laser disc and requiring a modified Acorn BBC Master computer to view, it was essentially unusable a mere 16 years later. There is, as the consortium’s project dramatized, no such thing as pure information; digital media is always tied to the systems of encoding that make it understandable.

The consortium’s project, named Planets, presents a common narrative from the field of digital preservation. The consortium’s brochure opens with an illustration graphing the declining lifespan of each successive medium: clay tablet, papyrus, vellum, and so forth, finishing with magnetic tape, disk, and optical media. And the burial project itself states the case for a digital genome by describing the inevitable changeover from analog to digital:

“We do not write documents, we word-process. We do not have cameras and photo albums, we have digital cameras and Photoshop. We do not listen to radios and cassette.”

The implication, the consortium makes clear, is that each medium has a certain lifespan, after which it becomes replaced by the next one. Yet what is most interesting about this narrative is how it frames media as either living or dead by contrasting the “live” updates of an Internet-connected database with a “dead” one: the term database suggests a living entity; is a dead or decommissioned database still a database?

By doing so, the Planets project picks up on a widespread rhetoric of media as dying or dead, with each “death” and “birth” of a medium said to signify a historical rupture or break. In a historiographic model Paul Duguid termed “supersession,” each successive medium is said to kill off the previous one.

Ah, Victor Hugo’s essay in Notre-Dame de Paris (which most of you know as The Hunchback of Notre-Dame):

“This will kill that. The book will kill the building . . . The press will kill the church . . . printing will kill architecture.”

If you’ve read the book you know that the novel is full of long “intermissions”, Hugo’s pontifications on all sorts of subjects. He has a very long “contemplation” on how the printing press had replaced architecture as the principal means of conveying meaning to the masses. As Hugo explains, the history of architecture is the history of writing. Before the printing press, mankind communicated through architecture. From Stonehenge to the Parthenon, alphabets were inscribed in “books of stone.” Rows of stones were sentences, Hugo insists, while Greek columns were “hieroglyphs” pregnant with meaning:

Man’s history, France’s history … physical. Written in stone, lead, timber, and now printing. Shall this, too, be preserved?

* * * * * * * * * * *

REPRODUCIBILITY CHECKLIST

Although it’s impossible to guarantee computational reproducibility over time, these strategies can maximize your chances.

Code Workflows based on point-and-click interfaces, such as Excel, are not reproducible. Enshrine your computations and data manipulation in code.

Document Use comments, computational notebooks and README files to explain how your code works, and to define the expected parameters and the computational environment required.

Record Make a note of key parameters, such as the ‘seed’ values used to start a random-number generator. Such records allow you to reproduce runs, track down bugs and follow up on unexpected results.

Test Create a suite of test functions. Use positive and negative control data sets to ensure you get the expected results, and run those tests throughout development to squash bugs as they arise.

Guide Create a master script (for example, a ‘run.sh’ file) that downloads required data sets and variables, executes your workflow and provides an obvious entry point to the code.

Archive GitHub is a popular but impermanent online repository. Archiving services such as Zenodo, Figshare and Software Heritage promise long-term stability.

Track Use version-control tools such as Git to record your project’s history. Note which version you used to create each result.

Package Create ready-to-use computational environments using containerization tools (for example, Docker, Singularity), web services (Code Ocean, Gigantum, Binder) or virtual-environment managers (Conda).

Automate Use continuous-integration services (for example, Travis CI) to automatically test your code over time, and in various computational environments.

Simplify Avoid niche or hard-to-install third-party code libraries that can complicate reuse.

Verify Check your code’s portability by running it in a range of computing environments.
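The “Record” and “Test” items above can be combined in a few lines. The sketch below uses only the Python standard library: it seeds a private random-number generator explicitly, so a simulated run can be repeated exactly from the recorded seed, and it checks a toy analysis against a positive control (data built with a known effect) and a negative control (data with none). The function names, the 0.5 threshold and the simulated data are hypothetical, chosen only for illustration.

```python
import random
import statistics


def simulate_measurements(seed, n=200, true_shift=0.0):
    """Generate n noisy measurements; the seed makes the run repeatable."""
    rng = random.Random(seed)  # private generator, independent of global state
    return [rng.gauss(true_shift, 1.0) for _ in range(n)]


def detects_shift(values, threshold=0.5):
    """Toy analysis: flag a shift if the sample mean exceeds a threshold."""
    return statistics.mean(values) > threshold


def test_same_seed_same_data():
    # Re-running with the recorded seed must reproduce the data exactly.
    assert simulate_measurements(seed=42) == simulate_measurements(seed=42)


def test_positive_control():
    # Data built with a large known shift should be flagged.
    assert detects_shift(simulate_measurements(seed=1, true_shift=2.0))


def test_negative_control():
    # Data with no shift should not be flagged.
    assert not detects_shift(simulate_measurements(seed=1, true_shift=0.0))


if __name__ == "__main__":
    for test in (test_same_seed_same_data, test_positive_control, test_negative_control):
        test()
    print("all tests passed")
```

Because the functions carry a `test_` prefix, a runner such as pytest would also collect them automatically, which makes them easy to hook into a continuous-integration service as the “Automate” item suggests.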