
3 November 2019 (Lisbon, Portugal) – Last week marked the 50th “birthday” of ARPANET, the Advanced Research Projects Agency Network. To be exact, it was on 29 October 1969 that the first message was sent across the proto-Internet, from Room 3420 of Boelter Hall at the University of California, Los Angeles (UCLA).

Room 3420, restored to its 1969 glory [Photo courtesy of Mark Sullivan]

ARPANET was an early packet-switching network and the first network to implement the TCP/IP protocol suite. Both technologies became the technical foundation of the Internet.

By the mid-1960s, ARPA had provided funding for large computers used by researchers in universities and think tanks around the country. The ARPA official in charge of the financing was Bob Taylor, a key figure in computing history who later ran Xerox’s legendary PARC lab (Steve Jobs made a visit and “borrowed” much of its unused technology for Apple’s Lisa and Macintosh). At ARPA, Taylor had become painfully aware that all those computers spoke different languages and couldn’t talk to each other. Taylor hated the fact that he had to have separate terminals, each with its own leased communication line, to connect with various remote research computers. His office was full of Teletypes. He said: “Solve this!”

And it was 30 years ago this year that Tim Berners-Lee, then a fellow at the physics research laboratory CERN on the French-Swiss border, sent his boss a document labelled Information Management: A Proposal. The memo suggested a system with which physicists at the centre could share “general information about accelerators and experiments”. Wrote Berners-Lee:

Many of the discussions of the future at Cern and the LHC era end with the question: “Yes, but how will we ever keep track of such a large project?” This proposal provides an answer to such questions.

His solution was a system called, initially, Mesh. It would combine a nascent technology called hypertext, which allowed human-readable documents to be linked together, with a distributed architecture in which those documents would be stored on multiple servers, controlled by different people, and interconnected. At first it didn’t really go anywhere. Berners-Lee’s boss took the memo, jotted a note across the top, “Vague but exciting …”, and that was it. It took another year, until 1990, for Berners-Lee to actually start writing code. By then, the project had taken on a new name. Berners-Lee now called it the World Wide Web.

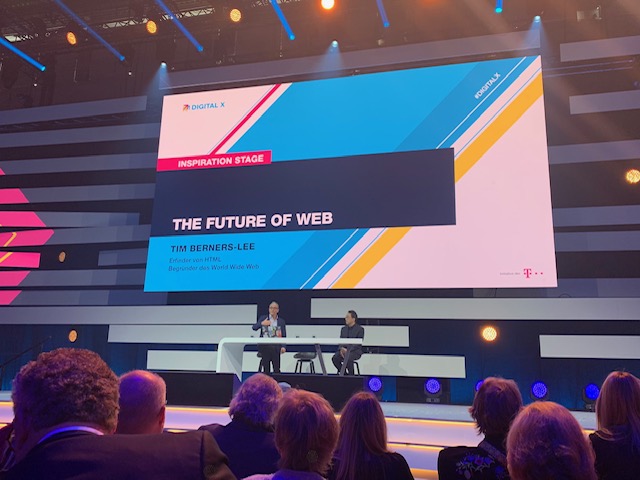

So it was rather fortunate that Berners-Lee was a keynote speaker last week at Digital X, which is becoming Deutsche Telekom’s flagship event for innovation and digital transformation.

Note: I will have a full review of Digital X later this week, with a series of video clips from my many interviews at the event. (For a short clip I did during the event, click here.) Alas, it shall not include Berners-Lee. I had full press credentials for the event, but I missed my assigned slot to interview Sir Tim because another video interview ran late. Such a pity.

Thirty years on, Berners-Lee’s invention has more than justified the lofty goals implied by its name. But with that scale has come a host of troubles, ones he could never have predicted when he was building a system for sharing data about physics experiments. He related many of the issues that trouble him. He had not envisioned the web’s success, and he was astounded that it had led to a world where people are so manipulated. And one of his biggest regrets was something he did himself: the way he chose to “bootstrap” the web into something that could handle a lot of users very quickly. By building on the pre-existing domain name system (DNS), which maps human-readable names to internet addresses, he gave up the chance to build something better. He said that at the time this seemed like a good idea, but it depended on DNS being managed benevolently. Today, that benevolent management can no longer be assumed.

So we are fifty years on from the first message sent across the proto-Internet, and I have been thinking about where we are. If you read this blog, you have read many of my pontifications on the subject in previous posts. The Internet has been mainstream in most markets for more than two decades. And the entire environment in which technology innovation occurs is vastly different from what it was even twenty-five years ago.

Today we struggle with how to manage our innovation, or whether we can manage it at all. In a field like medicine, we’ve established a rigorous, scientifically validated approach to testing and approving drugs, based on randomized controlled clinical trials. In the finance and insurance industries, new entrants must comply with strict rules that protect the consumer. Yes, we often fall down on that. Capital adequacy and anti-money-laundering requirements ensure the integrity of the financial system … supposedly. We fall down on that, too.

But the tech industry (and yes, that really needs to be defined) has never had such a framework. It has enjoyed “permissionless innovation” since the advent of the Internet fifty years ago. The open platform enabled entrepreneurs to try new things without getting permission from regulators or, indeed, anyone else. This is why we got streaming audio in the mid-nineties, while the traditional broadcast industry remained heavily regulated. And why we’ve had a flourishing ecology of remarkably large products like Skype or Wikipedia, alongside weird, wonderful niche products … with nobody saying “you cannot do that”.

Yes, the permissionless approach served us well. As tech guru Azeem Azhar recently opined:

Can you imagine getting a search engine as good as Google, if it had to be approved by even the most forward-thinking of regulators? If newspaper lobbyists had had their way, would blogging have ever existed, let alone flourished?

Yes, but … permissionless innovation also enabled other not-so-cool things like those toxic Usenet newsgroups, Chatroulette and 4chan.

So now the tech-regulation crowd has an alternative approach: the “precautionary principle”, sometimes shortened to “PP”. It sort of sits between permissionless innovation and full-on regulation. U.S. regulators and legislators are looking at it.

Me? Not in favor of it. Because it falls into that horror category: fuzzy. It favors looser interpretations of what harm might be created. To that extent, it can be subjective and open to lobbying and to the ill-informed excesses of public opinion. And if you keep up with current events, you know that public opinion is often not a great guide to complicated technical problems, especially where there are systemic effects.

OK, permissionless innovation, industry self-regulation and industries cosied up to regulators have not been a panacea, either. Yes, the “don’t ask permission” culture led to many things we might not otherwise have created in the early days of the internet. But now we are suffering from the hangover.

In his book How the Internet Happened (only published last year but destined to become a classic), Brian McCullough explains how loosely coupled dumb networks that put intelligence at the edge (aka the internet) allowed for innovation at the edge. In the 1990s, the phone companies hated it. Their power arose from centrally controlled circuit switching. If they had had their way, the internet protocol would have played second fiddle to proprietary virtual circuits based on ATM or SDH. And if they had succeeded, we would never have seen GeoCities, Viaweb, Skype, Yahoo, eBay, Instagram, Amazon and most of the digital services you use daily.

And the transformation of that entire environment over the past twenty-five years? YIKES:

– The domains of operation are too important. Information access or self-publishing, the products of the mid-90s, are valuable but ultimately niche. But today’s technology innovators are tinkering with insurance, financial services, healthcare, even our DNA.

– The tech industry today is simply too big and has tremendous access to capital. Entrepreneurs know how to “blitzscale”, that is, grow their companies globally very quickly. Capital markets are willing to support them however barefooted and Adam-Neumannish they are. The biggest firms … Alphabet, Facebook, Amazon, Tencent and Baidu … are sovereign-state in scale. This actual or potential leviathanhood demands that we ask them for more prudence. But we are flummoxed as to how to do it.

– There are emergent effects from many of these innovations which, in a permissionless environment, are borne collectively by society or simply weigh on the vulnerable. For example, Uber and Lyft have increased congestion in cities while reducing driver wages. These firms’ founders and earliest investors make out like bandits.

– Our societies are incredibly interconnected. A decade ago, a wave of mortgage defaults by sub-prime American homeowners was magnified into the worst world-wide (ok, Western world-wide) financial crisis since the Great Depression. That crisis drove General Motors into bankruptcy and debilitated factories around the world.

CONCLUSION

No, I have no answers. As I have noted in other posts, being an informed citizen is a daunting task. Trying to understand the digital technologies associated with Silicon Valley (social media platforms, big data, mobile technology and artificial intelligence), which increasingly dominate economic, political and social life, has been an even more daunting task. The breathtaking advance of scientific discovery and technology has the unknown on the run. Not so long ago, the Creation was 8,000 years old and Heaven hovered a few thousand miles above our heads. Now Earth is 4.5 billion years old and the observable Universe spans 92 billion light years.

But I think that, as we are hurled headlong into this frenetic pace, we suffer from illusions of understanding, a false sense of comprehension, and fail to see the looming chasm between what our brain knows and what our mind is capable of accessing.

It’s a problem, of course. Science has spawned a proliferation of technology that has dramatically infiltrated all aspects of modern life. In many ways the world is becoming so dynamic and complex that technological capabilities are overwhelming our human capacity to interact with and leverage those technologies optimally.

But being an opsimath, I shall carry on and write. And I am glad I can attend events like Digital X to help me wrap my brain around these complexities.